So far my life has been rather extraordinary in that, through great undeserved luck[1], I have been present at, or nearby to, many of the defining technological advances in computer science, Artificial Intelligence, and robotics that now, in 2021, are starting to dominate our world. I knew and rubbed shoulders[2] with many of the greats, those who founded AI, robotics, computer science, and the world wide web. My big regret nowadays is that I often have questions for those who have passed on, and I didn’t think to ask them any of these questions, even as I saw them and said hello to them on a daily basis.

This short blog post is about the origin of languages for describing tasks in automation, in particular for industrial robot arms. Three key players, all of whom have since passed away, were Doug Ross, Victor Scheinman, and Richard (Lou) Paul. They are not as well known as some other tech stars, but they were very influential in their fields. Here I must rely not on questions to them that I should have asked in the past, but on personal recollections and online sources.

Doug Ross[3] had worked on the first MIT computer, Whirlwind, and then in the mid nineteen fifties he turned to the numerical control of three- and five-axis machine tools, the tools that people use to cut metal parts into complex shapes. Such parts, many with curved surfaces, had to be made with far higher accuracy than a human operator could possibly achieve. Ross developed a “programming language”, APT (for Automatically Programmed Tool), for this purpose.

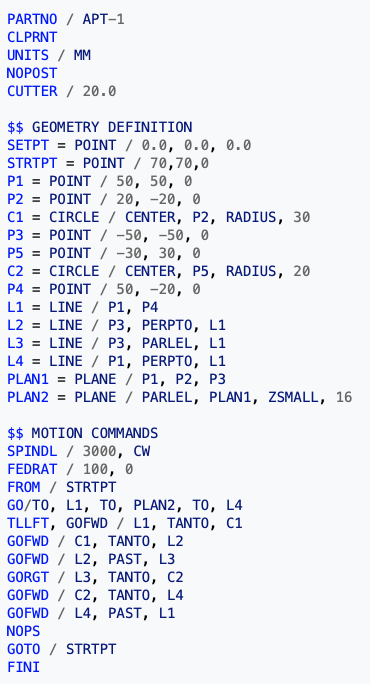

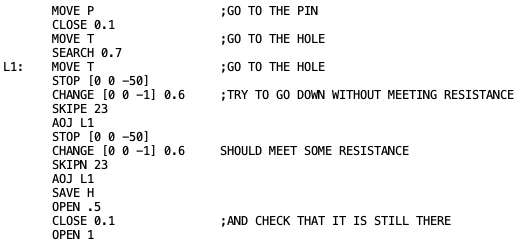

Here is an APT program from Wikipedia.

There are no branches (the GOTO is a command to move the tool) or conditionals; it is just a series of commands to move the cutting tool. It does provide geometrical calculations, such as finding tangents to circles (TANTO).
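To make the TANTO idea a little more concrete, here is a minimal Python sketch of one piece of geometry a tangency modifier relies on: finding the point where a straight line touches a circle. It is not APT's actual geometry processor, and the function name `tangent_point` and its arguments are made up for illustration. It assumes the line really is tangent, in which case the touching point is simply the foot of the perpendicular dropped from the circle's centre.

```python
import math

def tangent_point(center, radius, p0, p1, tol=1e-9):
    """Point where the line through p0 and p1 touches a circle (hypothetical helper).

    Assumes the line is tangent to the circle and raises ValueError if it is not.
    """
    cx, cy = center
    x0, y0 = p0
    dx, dy = p1[0] - x0, p1[1] - y0
    # Parameter of the foot of the perpendicular dropped from the centre onto the line
    t = ((cx - x0) * dx + (cy - y0) * dy) / (dx * dx + dy * dy)
    fx, fy = x0 + t * dx, y0 + t * dy
    # For a tangent line that foot lies exactly one radius from the centre
    if abs(math.hypot(fx - cx, fy - cy) - radius) > tol:
        raise ValueError("line is not tangent to the circle")
    return fx, fy

# Circle of radius 2 at the origin, and the horizontal line y = 2
print(tangent_point((0.0, 0.0), 2.0, (-5.0, 2.0), (5.0, 2.0)))  # (0.0, 2.0)
```

The point of the example is only that this kind of geometry was done by the computer, not by the machinist; the program itself still just lists one move after another.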

APT programs were compiled and executed on a computer–an enormously expensive machine at the time. But cost was not the primary issue. APT was developed at the MIT Servomechanisms Lab, and that lab was where mechanical guidance systems were built for flying machines such as ICBMs, and later for the spacecraft of the manned space program of the sixties.

Machine tools were existing devices that had been used by humans. But now came new machines, never used by humans. By the end of that same decade two visionaries, one technical and one business, were working in partnership to develop a new class of machine, automatic from the start: the industrial robot arm.

The first arm, the Unimate, developed by George Devol and Joe Engelberger[4] at their company Unimation, was installed in a GM factory in New Jersey in 1961. It used vacuum tubes rather than transistors, and analog servos for its hydraulics rather than the digital servos we would use today, and it simply recorded a sequence of positions to which the robot arm should go. Computers were too expensive for such commercial robots, so the arm had to be carefully built not to require them, and there was therefore no “language” used to control the arms. Unimates were used for spot welding and to lift heavy castings into and out of specialized machines. The robot arms did the same thing again and again, without any decision making taking place.

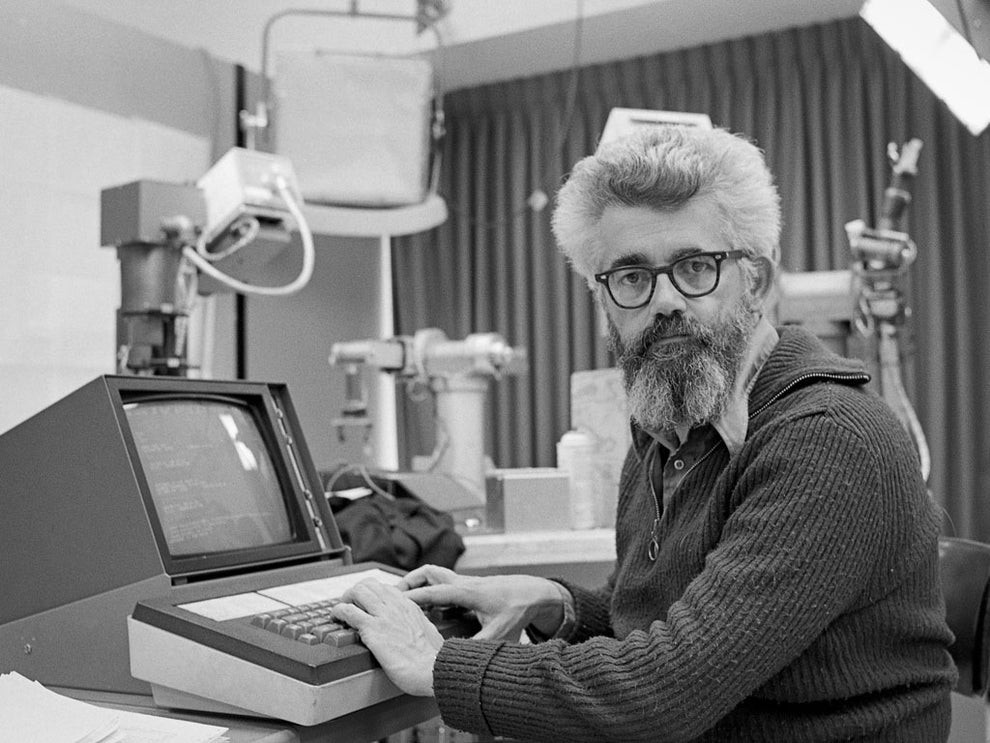

In the late sixties, mechanical engineer Victor Scheinman at the Stanford AI Lab designed what became known as the Stanford Arm. These arms were controlled at SAIL by a PDP-8 minicomputer, and were among the very first electric digital arms. Here is John McCarthy at a terminal in front of two Stanford Arms and a steerable COHU TV camera:

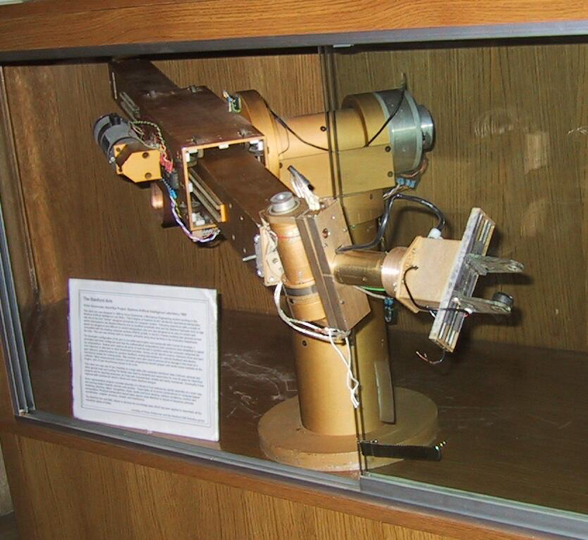

In the five years (over a period of seven years total) that I was a member of the Hand-Eye group at SAIL I never once saw John come near the robots. Here is a museum picture of the Gold Arm. It has five revolute joints and one linear axis.

The only contemporary electric digital arm was Freddy at Edinburgh University.

While the Stanford Arms (the Gold Arm, the Blue Arm, and the Red Arm) were intended to assemble real mechanical components as might be found in real products, Freddy was designed from the start to work only in a world of wooden blocks.

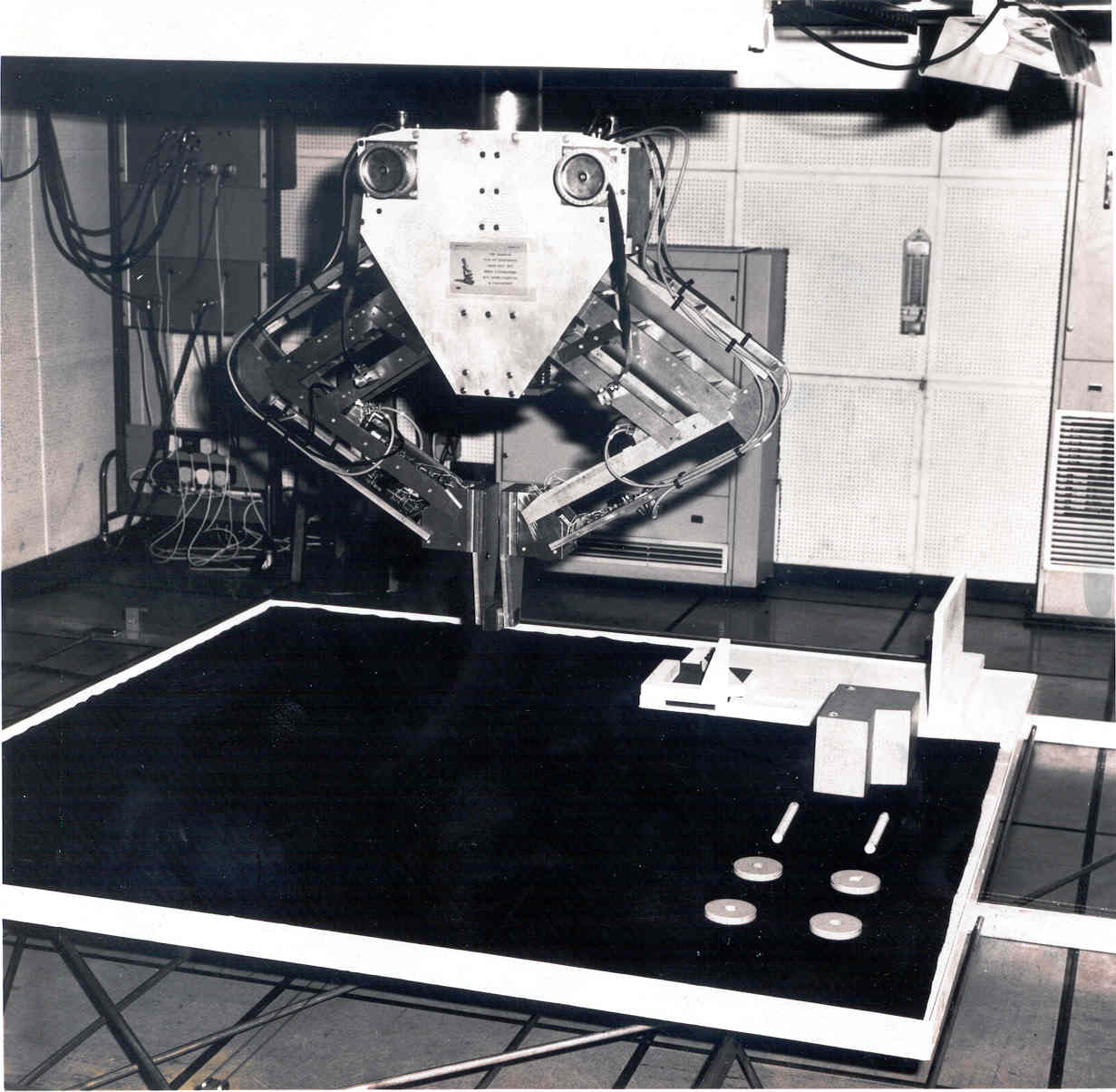

There was actually a fourth Stanford Arm built, the Green Arm. It had a longer reach and was used at JPL as part of a test bed for a Mars mission that never happened. In this 1978 photo the arm was controlled by a PDP-11 minicomputer on a mobile platform.

The Stanford Arms were way ahead of their time and could measure and apply forces; the vast majority of industrial robot arms still cannot do that today. They were intended to explore the possibility of automatic assembly of complex objects. The long term intent (still nowhere near met in practice, even today) was that an AI system would figure out how to do the assembly and then execute it. This was such a hard problem that it was decided, as a first step, to have people write programs to assemble parts, and in practice that is still how it is done today.

Richard (Lou) Paul, who left the Hand-Eye group right before I arrived there in 1977 (I knew him for decades after that), developed the first programming language for controlling a robot arm doing something as sophisticated as assembly. It was called WAVE. I suspect its form was based on a nerdy pun or two, and even today it influences the way industrial robots are controlled. Besides the language, Paul also developed inverse kinematics techniques, which compute the joint angles needed to put the hand at a desired position; today these are known as IK solvers and are used in almost all robots.
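As a rough illustration of what an IK solver does, here is a sketch for the simplest textbook case, a planar arm with two links, rather than the six-joint Stanford Arm that Paul actually worked with. The function names and link lengths are hypothetical, and forward kinematics is included only to check the answer.

```python
import math

def ik_two_link(x, y, l1, l2, elbow=1):
    """Joint angles (radians) placing the tip of a planar two-link arm at (x, y).

    elbow=+1 or -1 selects which of the two mirror-image solutions to return.
    """
    # Law of cosines gives the cosine of the elbow angle
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)
    if not -1.0 <= c2 <= 1.0:
        raise ValueError("target is out of reach")
    s2 = elbow * math.sqrt(1.0 - c2 * c2)
    theta2 = math.atan2(s2, c2)
    # Shoulder angle: direction to the target minus the offset caused by the elbow bend
    theta1 = math.atan2(y, x) - math.atan2(l2 * s2, l1 + l2 * c2)
    return theta1, theta2

def fk_two_link(theta1, theta2, l1, l2):
    """Forward kinematics, used here to check the inverse solution."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y

for elbow in (1, -1):
    t1, t2 = ik_two_link(1.2, 0.5, 1.0, 1.0, elbow)
    print(elbow, fk_two_link(t1, t2, 1.0, 1.0))  # both close to (1.2, 0.5)
```

Even this toy case has two answers for most targets, which is one reason a real solver for a six-joint arm has to make choices about which configuration to use.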

By the late sixties the big US AI labs all had PDP-10 mainframes made by Digital Equipment Corporation in Massachusetts[5]. The idea was that Paul’s language would be processed by the PDP-10, and then the program would largely run on the PDP-8. But what form should the language take? Well, the task was assembly, as in assembling mechanical parts. But many programs in those days were written in machine specific “assembly language”, which assembled instructions for the computer, one by one, into binary. Well, why not make the robot assembly language look like PDP-10 assembly language??? And the MOVE instruction, which moved data around in the PDP-10, would be repurposed to move the arm about instead. Funny, and fully in tune with the ethos at the Stanford and MIT AI Labs at the time.

I cannot find either an online or offline copy of Paul’s 1972 Ph.D. thesis anywhere. It had numbers STAN-CS-311 and Stanford AIM-177 (for AI Memo); if anyone can find it please let me know. [[See the comment from Bill Idsardi and Holly Yanco below. I downloaded and read the free version and have some updates based on that in my reply to Professor Yanco.]] So here I will rely on a later publication by Bob Bolles and Richard Paul from 1973, describing a program written in WAVE. It is variously called STAN-CS-396 or AIM-220. The available scan from Stanford is quite low quality, rescued from a microfilm scan from years ago. I have retyped a fragment of a program in the document, which assembles a water pump, so that it is easier to read.

For anyone who has seen assembler language this looks familiar, with the way the label L1 is specified, the comments following a semicolon, and one instruction per line. The program control flow even uses the same instructions as PDP-10 assembly language, with AOJ for “add one and jump”, and SKIPE and SKIPN for “skip the next instruction if the register is zero, or not”. The only difference I can see is that there is just a single implicit register for the AOJ instructions, and that the skip instructions refer to a location (23) where some force information is cached (perhaps I have this correct, perhaps not…). This program seems to be grasping a pin, then going to a hole, and then dithering around until it can successfully insert the pin in the hole. It is keeping a count of the number of attempts, though this code fragment does not use that count. It also seems to be checking at the end whether it dropped the pin by accident (by opening the gripper and closing it just a little again to see if a force is felt, as would be the case if the pin remained in the same place), though there is no code to act on a dropped pin. Elsewhere in the document it is explained how the coordinates P and T are trained, by moving the arm to the desired location and typing “HERE P” or “HERE T”; when the arms were not powered they could be moved around by hand.
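For readers who don't read PDP-10 assembler, here is a loose Python paraphrase of the control flow described above. It is not a translation of the actual WAVE program: the dither offsets, the callback `try_insert` standing in for the force-limited downward move, and the gripper-force callback `regrasp_force` are all hypothetical. The reading of the force flag (the pin is taken to be in the hole when the downward move is not stopped early by contact) is also an assumption.

```python
# Hypothetical dither pattern (offsets in mm) tried around the taught hole position T
DITHER_OFFSETS = [(0, 0), (1, 0), (-1, 0), (0, 1), (0, -1),
                  (1, 1), (-1, -1), (1, -1), (-1, 1)]

def insert_pin(try_insert, offsets=DITHER_OFFSETS):
    """Dither around the hole until the pin goes in. Returns (success, attempts).

    try_insert(dx, dy) is a hypothetical stand-in for a force-limited downward
    move: it returns True if the move was NOT stopped early by contact force,
    which this sketch assumes means the pin dropped into the hole.
    """
    attempts = 0                      # the counter that the AOJ instructions bump
    for dx, dy in offsets:
        attempts += 1
        if try_insert(dx, dy):        # the role played by SKIPE/SKIPN on the force flag
            return True, attempts
    return False, attempts

def pin_still_held(regrasp_force, threshold=0.5):
    """Dropped-pin check: open the gripper, close it a little, and feel for force.

    regrasp_force() is a hypothetical sensor read returning the gripper force (N).
    """
    return regrasp_force() > threshold

# Simulated hole that only accepts the pin at offset (0, -1)
ok, n = insert_pin(lambda dx, dy: (dx, dy) == (0, -1))
print(ok, n)                          # True 5
print(pin_still_held(lambda: 2.0))    # True
```

Unlike the fragment in the document, this sketch hands back the attempt count, just to show where the AOJ counter would come in useful.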

People soon realized that a pun on computer assembly language was not powerful enough to do real work. By late 1974 Raphael Finkel, Russell Taylor, Robert Bolles, Richard Paul, and Jerome Feldman had developed a new language, AL (for Assembly Language), an Algol-ish language based on SAIL (Stanford Artificial Intelligence Language) which ran on the lab’s PDP-10. Shahid Mujtaba and my officemate Ron Goldman continued to develop AL for a number of years, as seen in this manual. They, along with Maria Gini and Pina Gini, also worked on the POINTY system, which made gathering coordinates like P and T in the example above much easier.

Meanwhile Victor Scheinman had spent a year at the MIT AI Lab, where he had developed a new small, force-controlled arm with six revolute joints, called the Vicarm. He left one copy at MIT[6] and returned to Stanford with a second copy.

I think it was in 1978 when he came to a robotics class that I was in at Stanford, carrying both his Vicarm and a case with a PDP-11 in it. He set them up on a table top and showed how he could program the Vicarm in a language based on Basic, a much simpler language than Algol/SAIL. He called the language VAL, for Victor’s AL. Also, remember that this was before the Apple II (and before anyone had even heard of Apple, founded just the year before) and before the PC–simply carrying in a computer and setting it up in front of the class was quite a party trick in itself!!

Also in 1978 Victor sold a version of the arm, about twice the size of the Vicarm, to Engelberger’s company Unimation; it came to be known as the PUMA (Programmable Universal Machine for Assembly). It became a big-selling product using the language VAL. This was the first commercial robot that was all electric, and the first one with a programming language. Over the years various versions of this arm were sold, ranging in mass from 13 kg to 600 kg.

The following handful of years saw an explosion of programming languages, usually unique to each manufacturer, and usually based on an existing programming language. Pascal was a popular basis at the time. Here is a comprehensive survey from 1986 showing just how quickly things moved!

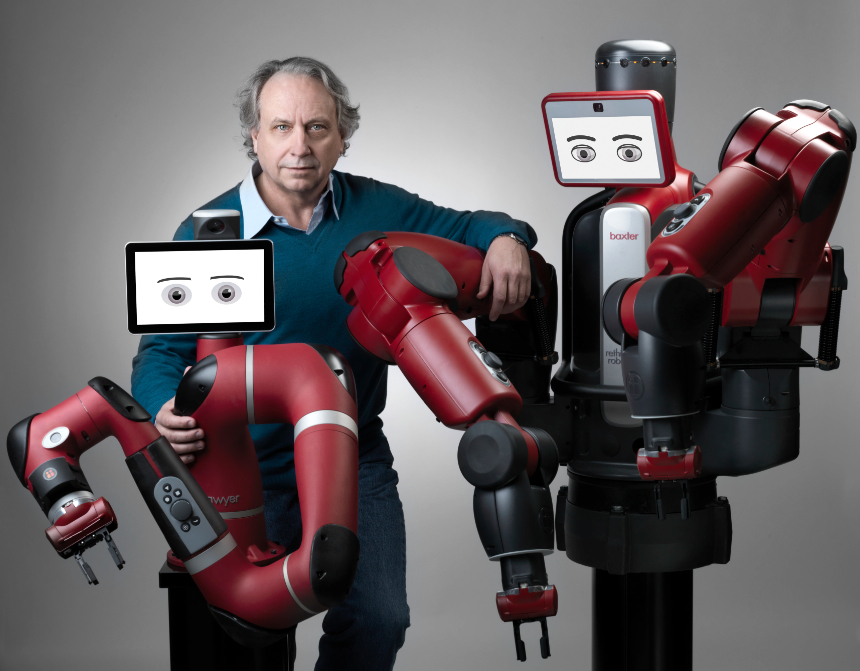

By 2008 I thought that it was time we tried to go beyond the stopgap measure of having programming languages for robot arms, and return to the original dream from around 1970 at the Stanford Artificial Intelligence Lab. I founded Rethink Robotics. We built and shipped many thousands of robot arms to be used in factories. The first was Baxter, and the second was Sawyer. In the linked video you can see Sawyer measuring the external forces applied to it, and trying to keep the resultant force at zero by complying with the force and moving. In this way the engineer in the video is able to effortlessly move the robot arm anywhere she likes. This is a first step toward being able to show the robot where things are in the environment, as in the “HERE P” or “HERE T” examples with WAVE, and later POINTY. Here is a photo of me with a Sawyer on the left and an older Baxter on the right.
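The idea behind the Sawyer demonstration can be sketched with a textbook admittance controller: the arm commands a velocity proportional to the external force it senses, so it keeps moving in the direction it is pushed until the push goes away. This is a generic sketch, not Rethink's controller. The gains, the time step, and modelling the engineer's hand as a spring are all assumptions, and a real arm would also have to compensate for gravity and its own dynamics.

```python
def admittance_step(position, force, damping, dt):
    """One control tick of a pure-damper admittance law: velocity = force / damping."""
    velocity = [f / damping for f in force]
    return [p + v * dt for p, v in zip(position, velocity)]

def simulate_hand_guiding(start, hand_target, stiffness=100.0, damping=20.0,
                          dt=0.01, steps=1000):
    """Model the person's hand as a spring pulling the end effector toward hand_target.

    Each tick the arm senses that spring force and yields to it, so the
    resultant force decays toward zero as the arm arrives where it is led.
    """
    position = list(start)
    for _ in range(steps):
        force = [stiffness * (h - p) for h, p in zip(hand_target, position)]
        position = admittance_step(position, force, damping, dt)
    return position

print(simulate_hand_guiding([0.0, 0.0, 0.0], [0.3, -0.1, 0.2]))  # close to [0.3, -0.1, 0.2]
```

A larger `damping` value makes the arm feel heavier to push; a smaller one makes it feel lighter but, on real hardware, more prone to instability.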

Back in 1986 I had published a paper on the “subsumption architecture” (you can see the lab memo version of that paper here). Over time this control system for robots came to be known as the “behavior based approach”, and in 1990 I wrote the Behavior Language, which was used by many students in my lab and at the company iRobot, which I had co-founded in 1990. Around 2000 an MIT student, Damian Isla, refactored my approach and developed what are now called Behavior Trees. Most video games are now written using Behavior Trees, in what are the two most popular authoring platforms, Unity and Unreal.

For version five of our robot software platform at Rethink Robotics, called Intera (for interactive), we represented the “program” for the robot as a Behavior Tree. While it was possible to author a behavior tree using a graphical user interface (there was no text version of a tree), it was also possible simply to show the robot what you wanted it to do; an AI system made inferences about what was intended, sometimes asked you to fill in some values using simple controls on the arm of the robot, and automatically generated a Behavior Tree. It was up to the human showing the robot the task whether they ever looked at or edited that tree. Here you can see Intera 5 running not only on our robot Sawyer, but also on the two other commercial robots at the time that had force sensing. When my old officemate and developer of AL, Ron Goldman, saw this system he proclaimed something like “it’s POINTY on steroids!”.
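For readers who have not met Behavior Trees, here is a minimal Python sketch of the two most common composite nodes, a sequence and a fallback (sometimes called a selector), used to build a small pick-and-place tree. The node classes and task names are hypothetical and are not Intera's representation. Real Behavior Tree libraries also have a RUNNING status for actions that take more than one tick, which this sketch leaves out.

```python
from enum import Enum

class Status(Enum):
    SUCCESS = "success"
    FAILURE = "failure"

class Action:
    """Leaf node: runs a callable that returns True (success) or False (failure)."""
    def __init__(self, name, fn):
        self.name, self.fn = name, fn

    def tick(self, trace):
        trace.append(self.name)
        return Status.SUCCESS if self.fn() else Status.FAILURE

class Sequence:
    """Ticks children left to right; fails as soon as one child fails."""
    def __init__(self, *children):
        self.children = children

    def tick(self, trace):
        for child in self.children:
            if child.tick(trace) is Status.FAILURE:
                return Status.FAILURE
        return Status.SUCCESS

class Fallback:
    """Ticks children left to right; succeeds as soon as one child succeeds."""
    def __init__(self, *children):
        self.children = children

    def tick(self, trace):
        for child in self.children:
            if child.tick(trace) is Status.SUCCESS:
                return Status.SUCCESS
        return Status.FAILURE

def pick_and_place(holding, pick_ok):
    # Hypothetical task: make sure a part is in the gripper, then carry and release it
    return Sequence(
        Fallback(Action("already_holding", lambda: holding),
                 Action("pick", lambda: pick_ok)),
        Action("move_to_place", lambda: True),
        Action("release", lambda: True),
    )

trace = []
print(pick_and_place(holding=False, pick_ok=True).tick(trace), trace)
# Status.SUCCESS ['already_holding', 'pick', 'move_to_place', 'release']
```

The fallback node is what lets the tree skip the pick when the part is already in hand, a small decision of exactly the kind a Unimate playback sequence could not make.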

Baxter and Sawyer were the first safe robots that did not require a cage to keep humans away from them for the humans’ protection. And Sawyer was the first modern industrial robot to finally get away from having a computer-like language to control it, as all robots had had since the idea was first developed at the Stanford AI Lab back in the very early seventies.

There is still a lot remaining to be done.

[1] I had written a really quite abysmal master’s thesis in machine learning in 1977 in Adelaide, Australia. Somehow, through an enormous stroke of luck I was accepted as one of twenty PhD students in the entering class in Computer Science at Stanford University in 1977. I showed up for my first day of classes on September 26th, 1977, and that day I was also one of three new students (only three that whole year!!) to start as research assistants at the Stanford Artificial Intelligence Laboratory (SAIL). At that time the lab was high above the campus on Arastradero Road, in a wooden circular arc building known as the D. C. Power Building. It has since been replaced by a horse ranch. [And there never was an A. C. Power Building at Stanford…] It was an amazing place with email, and video games, and computer graphics, and a laser printer, and robots, and searchable news stories scraped continuously from the two wire services, and digital audio at every desk, and a vending machine that emailed you a monthly bill, and a network connected to about 10 computers elsewhere in the world, all in 1977. It was a time machine that only traveled forwards.

I was a graduate student at SAIL until June 1981, then a post-doc in the Computer Science Department at Carnegie Mellon University for the summer, and then I joined the MIT Artificial Intelligence Laboratory for two years. In September 1983 I was back at Stanford as a Computer Science faculty member, where I stayed for one year, before joining the faculty of the Electrical Engineering and Computer Science Department back at MIT, where I was again a member of the MIT Artificial Intelligence Laboratory. I became the third director of the lab in 1997, and in 2003 I became director of a new lab at MIT formed by joining the AI Lab and the Lab for Computer Science: CSAIL, the Computer Science and Artificial Intelligence Lab. It was then and remains now the largest single laboratory at MIT, now with well over 1,000 members. I stepped down as director in 2007, and remained at MIT until 2010.

All the while, beginning in 1984, I have started six AI and robotics companies in Boston and Palo Alto. In total, I have spent only about six months since 1984 not being part of any startup.

[2] Sometimes I was their junior colleague, and at times I became their “manager” on org charts which bore no relation at all to what happened on the ground. I also met countless other well known people in the field at conferences or when I or they visited each other’s institutions and companies.

[3] I often used to sit next to Doug Ross at computer science faculty lunches at MIT on Thursdays throughout the eighties and nineties. We would chat amiably, but I must admit that at the time I did not know of his role in developing automation! What a callow youth I was.

[4] I met Joe Engelberger many times, but he never seemed to quite approve of my approach to robotics, so I cannot say that we had a warm relationship. I regret that today, and I should have tried harder.

[5] Ken Olsen left MIT to found DEC in 1957, based on his invention of the minicomputer. DEC built a series of computers with names in sequence PDP-1, PDP-2, etc., for Programmed Data Processor. The PDP-6 and PDP-10 were 36-bit mainframes. The PDP-7 was an 18-bit machine upon which UNIX was first developed at Bell Labs (C came a little later, on the PDP-11). The PDP-8, a 12-bit machine, was cheap enough to bring computation into individuals’ research labs, and the smaller successor, the PDP-11, really became the workhorse small computer before the microprocessor was invented and developed. Ken lived in a small (by population size) town, Lincoln, on the route between Lexington and Concord that was taken on that fateful day back in 1775. I also lived in that town for many years and some Saturday mornings we would bump into each other at the checkout line of the town’s only grocery store, Donelan’s. Often Ken would be annoyed about something going on at MIT, and he never failed to explain to me his position on whatever it happened to be. I wish I had developed a strategy of asking him questions about the early days of DEC to divert his attention.

[6] For many years at MIT I took the Vicarm, that Victor had left behind, to class once a year when I would talk about arm kinematics. I used it as a visual prop for explaining forward kinematics, and the need for backward kinematics. I never once thought to take a photograph of it. Drat!!!

Richard Paul’s 1972 Stanford University dissertation, MODELLING, TRAJECTORY CALCULATION AND SERVOING OF A COMPUTER CONTROLLED ARM:

https://search.proquest.com/pqdtglobal/docview/302681702/33D225F344F54449PQ

Thanks Bill! For those of you who might be interested, they are charging $41 to download the PhD thesis. I’m pretty sure that Stanford would hold the copyright.

A free version: https://apps.dtic.mil/sti/pdfs/AD0785071.pdf

Thanks Holly! I’m glad I didn’t pay for the version last night.

There is so much richness in the dissertation. Some quick observations.

1. It is amazing how short, in word count, Ph.D. dissertations were at the time, and how much fresh ground could be covered in so few words.

2. The dissertation came with a playable movie embedded in it. Printed accurately on single-sided paper (this probably doesn’t work if one prints this scanned copy), there is a flip movie in the upper right corner of each page, showing the Stanford Arm doing a task. The “URDF” model looks to have been done in Bruce Baumgart’s “winged-edge polyhedra” modelling system. That was written in SAIL. [In 1981 when I first got to MIT I reimplemented that representation on a CADR Lisp Machine, but I used a hidden line algorithm for rendering that was by then more modern. It was actually a hidden surface renderer, and if I had had any display that was more than single bit black and white I could have rendered greyscale or color surfaces. The best I could do at the time was to take advantage of the fast processor and use what was then a ridiculous number of faces to approximate cylinders. That gave a sheeny sort of surface for cylinders, rather than the chunky polyhedral approximation Paul had to use.]

3. The SKIPE and SKIPN instructions in the example I included refer, with argument 23, to a location which is a flag on whether the “arm failed to stop on specified force”. I can’t quite tell the logic of this failure, and how it relates to whether a 0 or a 1 is put in the location (see page 68 of the document). In both cases they are looking at the result of the previous instruction, “CHANGE [0 0 -1] 0.6”, which seems to be a move in the -Z direction (most likely vertically downwards) with a maximum speed of 0.6 (also page 68). The AOJ instruction is not documented in the dissertation; I am guessing it was added in the following year, and implicitly updated a new shared variable like those described on page 68.

4. It appears that there was a servo loop running at 60 Hz on the PDP-6! Now this requires some knowledge of the hacked mainframe at SAIL. Up to 32 users on “data disc” terminals (there were 64 of them but only 32 could be used at once), 4 users on enormous vector graphics terminals, and some number of dial-in users (on split speed 1200/150 modems) could be using the PDP-10 at once. Servo loops on that machine would have been wildly choppy and unusable on the robot. However, before the PDP-10, the lab had used a PDP-6, which had the same instruction set as the later machine but was much slower. Rather than throw it out, the lab modified the PDP-10 in hardware to use the PDP-6 as its front end processor, via shared memory. As I understand it the PDP-6 ran real time loops for the data disc, a digital disk that stored the 32 images of the screens of users, which then had 32 takeoffs in black and white NTSC that were switched through a MUX with 64 outputs that went off to the 64 physical terminals around the lab, via thick coax cables. There were also four TV tuners that fed signals on the input side, so you could watch TV on your green phosphor screen if you wanted to, and the sound came digitally to a special speaker at your desk, which was there for CCRMA, the Center for Computer Research in Music and Acoustics, which shared the SAIL computer for FM synthesis of music. Offices had no locks on them and between 5am and 9am composers would descend on the lab and use the computer to do music composition. The four vector terminals could also display music notation. Possibly the PDP-6 also handled all the keystrokes on all the special purpose keyboards (which had a 12 bit (??) parallel interface via other cables to the computer). But back to robots! It looks like the PDP-6 was also used by Paul to do the real-time servos.

5. Paul used an additional unique feature of the SAIL PDP-10, the LEAP associative memory. The link given by Bill Idsardi above includes a preview feature that shows that the $41 version of the download includes the signature page, which this version does not. Jerry Feldman was Paul’s principal advisor (and his readers were John McCarthy and Bernie Roth). Jerry had had the PDP-10 modified so that the last 4K of 36-bit words in the 256K address space formed an associative memory. All users of the machine had their upper 4K mapped to the same piece of physical memory, so using it was a little tricky in practice. The SAIL language gave access to this memory via the LEAP system, which used a special syntax based on the rather wild and unique character set that was used at SAIL. I remember that a multiply sign with a circle around it was one of the characters used, along with others that I don’t remember. In any case each 36-bit word had three 12-bit fields, and you could access triples stored in the 4K of memory in an associative way by instantiating some of these fields; this was content-based memory reference. In his dissertation Paul says that he used this memory to place things so that other high level planners would be able to have access to the lower levels of the arm controllers. He doesn’t say whether anyone used this in practice, but that was clearly the long term intent.
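The associative lookup is easy to mimic in software. Here is a sketch, assuming only what is said above: three 12-bit fields packed into each 36-bit word, with a query that fixes some fields and leaves the others free. The function names are made up, the meaning of the three fields is left open, and the linear scan in `match` stands in for what the SAIL hardware did by content.

```python
FIELD_BITS = 12
FIELD_MASK = (1 << FIELD_BITS) - 1       # 0o7777, the largest 12-bit value

def pack(a, o, v):
    """Pack three 12-bit fields into one 36-bit word."""
    for field in (a, o, v):
        if not 0 <= field <= FIELD_MASK:
            raise ValueError("field does not fit in 12 bits")
    return (a << 2 * FIELD_BITS) | (o << FIELD_BITS) | v

def unpack(word):
    """Split a 36-bit word back into its three 12-bit fields."""
    return ((word >> 2 * FIELD_BITS) & FIELD_MASK,
            (word >> FIELD_BITS) & FIELD_MASK,
            word & FIELD_MASK)

def match(memory, a=None, o=None, v=None):
    """Return every stored triple agreeing with the fields given (None = wildcard).

    The SAIL hardware matched by content; this sketch just scans a list.
    """
    pattern = (a, o, v)
    return [t for t in map(unpack, memory)
            if all(p is None or p == f for p, f in zip(pattern, t))]

memory = [pack(1, 2, 3), pack(1, 5, 6), pack(7, 2, 3)]
print(match(memory, a=1))          # [(1, 2, 3), (1, 5, 6)]
print(match(memory, o=2, v=3))     # [(1, 2, 3), (7, 2, 3)]
```

With 12 bits per field, each field can name at most 4,096 distinct items, which matches the 4K size of the associative region.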

I have a paper copy of his thesis in one of the many boxes in my library. If I ever find it again I’ll post it.

The “paper video” in the corner was amazing.

Rodney,

I worked at Unimation when Vic Scheinman came to demonstrate his robot to me, Maury Dunne, and Bill Perzley.

Vic set up the robot and the computer. The robot started, typed some words, and typed a command to stop itself. I took an 8mm video of his visit. I sent it to him about a year before he died. (I wonder who has it now).

Vic’s arm failed while he was there. One of the motors died. He sent me out for some wire. Then he built a new motor from scratch on the Bridgeport in our lab. I was completely impressed.

Vic was later hired to design the Puma. His desk was next to mine.

I got the VAL language from Vic and Bruce Shimano. I modified and extended it to drive our Unimate from a PDP-11/03. It also drove the 2 arm robot for Ford.

Richard (Lou) Paul was at SRI using a Unimate. He taught me and Bill Perzley how to use DH matrices and the Jacobian for robots. I extended VAL to use some of that math.
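For readers who have not used them, here is a small Python sketch of DH matrices: each link contributes a standard Denavit-Hartenberg homogeneous transform, and chaining them gives the hand position. The Jacobian here is computed numerically by central differences, a convenient stand-in rather than the analytic Jacobian one would derive by hand. The two-link parameters and function names are hypothetical.

```python
import math

def dh(theta, d, a, alpha):
    """Standard Denavit-Hartenberg link transform as a 4x4 nested list."""
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [[ct, -st * ca,  st * sa, a * ct],
            [st,  ct * ca, -ct * sa, a * st],
            [0.0,      sa,       ca,      d],
            [0.0,     0.0,      0.0,    1.0]]

def matmul(A, B):
    """Multiply two 4x4 matrices."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)] for i in range(4)]

def tip_position(joints, links):
    """Chain the link transforms; links is a list of (d, a, alpha) for revolute joints."""
    T = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    for theta, (d, a, alpha) in zip(joints, links):
        T = matmul(T, dh(theta, d, a, alpha))
    return [T[0][3], T[1][3], T[2][3]]

def position_jacobian(joints, links, h=1e-6):
    """Numerical 3 x n Jacobian d(tip)/d(joint) by central differences."""
    columns = []
    for i in range(len(joints)):
        plus, minus = list(joints), list(joints)
        plus[i] += h
        minus[i] -= h
        p, m = tip_position(plus, links), tip_position(minus, links)
        columns.append([(pk - mk) / (2 * h) for pk, mk in zip(p, m)])
    return [list(row) for row in zip(*columns)]   # transpose to 3 rows

# Hypothetical planar two-link arm, both links 1 m long
LINKS = [(0.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
print(tip_position([math.pi / 2, 0.0], LINKS))   # close to [0.0, 2.0, 0.0]
```

The Jacobian relates small joint motions to small hand motions, which is what makes it useful both for velocity control and for iterative IK on arms where no closed-form solution is convenient.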

Later, at IBM Research (with Russ Taylor and others), we worked on AML, the robot language used to drive the IBM robot. I worked on the vision subsystem (Gold Filling) and a multi-address space linking loader. I also added networking to link it to the Boca robot. Ralph Hollis left the group and all of that robot work went with him to Carnegie Mellon. I worked at CMU and visited Ralph often.

I researched a “design-to-build system”. The idea is to start with a CAD drawing of an assembly and automatically construct a robot plan to build it. This was used to assemble a flashlight, driven from AML.

Later still, I worked with Scott Fahlman on Robot-Human cooperation to change a car tire. We used a mashup of Scone (Scott’s Knowledge base), a modified version of Forgy’s RETE algorithm for rules, a modified form of Alice’s natural language, machine learning (to recognize lug nuts), and a custom feedback system to dynamically learn new rules and knowledge from interacting with the human.

These days all of my robot work is in ROS.

Tim

Wow Tim, that is quite a story! You were really in the thick of it, and your technical work was part of making VAL a commercial reality.

Do you still have the original 8mm film, or a digital version of it? Can you post it to youtube?

The three of you made a very good decision in deciding that Unimation would work with Vic. The PUMA had a really big impact on the world. Thank you!

And the story about Vic rebuilding the motor by hand while visiting is priceless!

I sent the 8mm film to Victor about a year before he died. He said he would send me a DVD version but never did. I don’t know any of his relatives so I can’t find who might have the film.

I still have some of the Unimation robot documentation. Do you know of a good museum that might want it?

I also have a displaced center insertion tool that was developed at MIT. It is based on the idea that it is better to “pull” something into a hole rather than push it, so the “center” is displaced to appear to be in front of the peg.

Methinks I also have one of the original Unimate encoders.

I wish I’d kept the 8mm video of Vic’s visit. Perhaps if you know some of his relatives they might have saved it. Vic promised me he would copy it to DVD but he never did.

Tim

Freddy can be seen in operation in the following video of the televised debate on the Lighthill report:

https://youtu.be/03p2CADwGF8?t=1661

I’ve linked the video to just before the start of Donald Michie’s presentation of Freddy. Michie was the PhD advisor of Stephen Muggleton who is my PhD advisor. Stephen has told me that the Lighthill Report, besides almost killing AI research as a whole, nipped in the bud the nascent field of robotics research in the UK and is the main reason why today the UK has no robotics research to speak of.

I didn’t know that Freddy could only operate on its wooden-blocks world (I know next to nothing about Freddy!). On the other hand, it seems to have some early machine vision capabilities. I wonder if presenting the Stanford Arms would have made any difference to the debate, although of course the debate was just for show and irrelevant given the damning report that Sir James Lighthill had already written. Ironically, of all AI research at the time, Lighthill praised only SHRDLU – a virtual robot arm moving virtual blocks around a virtual world, guided by a natural-language dialogue with its operator. So natural English was one of the early “robot arm programming languages”, although it doesn’t seem to be used for that task today.

Thanks Stassa! That is great.

Thanks for these fascinating recollections, Dr. Brooks!

Good stuff!

Those weren’t the first electric arms. There were some notable earlier ones, based on teleoperators (e.g., for nuclear handling) retrofitted with electric motors.

One such arm, and the programming language, was from Heinrich Ernst’s 1961 PhD thesis. Way ahead of his time! There is a very nice video on the web somewhere. I downloaded a copy but I don’t have the URL.

I also have a clip from a History Channel program. A very nice 7 minute segment on Unimation including some conversation with George Devol.

Another collection I don’t have the URL for — MIT has a repository of a couple dozen videos from the old days, named things like 07-arm.mp4. Lots of stuff — a lecture by Minsky, for example. But notably including videos from the old Copy Demo.

And, maybe we are focused on arms here, but otherwise I think Shakey should be included. It was a robotic manipulator, even if it wasn’t an arm. Ralph Hollis’s Newt, as well.

I’m hoping somebody might post URL’s for the above. If not I can upload them somewhere.

Thanks Matt, lots of good stuff from you here.

A. I’ll start with Shakey. I did not include Shakey, even though it manipulated boxes by pushing them around, as it did not use a “computer language” to program that task. This essay was about the origin of the use of computer languages for industrial robot arms, and there just was no such language with Shakey.

Instead Shakey tried, and succeeded, at the whole AI approach: translating a task specification, in the form of a goal state of the world, into a series of motions that satisfied the goal given what it could sense about the world. Paul gave up on this, probably intending to solve it later, and instead looked directly at how to specify the motions, using a language he made up for the problem, WAVE.

I always think of there being a mismatch between “computation”, whether explicitly programmed or coming from an AI planner, and the real world, which needs to be absorbed by some sort of “impedance matcher”. Your later work with Tomas Lozano-Perez and Russ Taylor did this by setting things up such that the physics of a compliant motion was exactly what was needed to get to a local goal state. You used physics to replace computation. Paul and Bolles used computation for a search, with the physics of a force limited motion to get the impedance match. Shakey used its LLAs (low level actions), which in the early days no one talked about, to get a little finite state machine to do that work, without relying on any “slop” in physics. My subsumption architecture (and the behavior trees that it begat) made those states in the FSMs the primary representation of the “impedance matcher” (I mean a very conceptual form of impedance here).

B. What I did miss in my essay is Heinrich Ernst’s work, described in this 1961 dissertation. I had never seen the MHI language, nor even the THI language (see below for both), which both ran his MH-1, or Mechanical Hand 1. Here is where to download his dissertation:

https://dspace.mit.edu/bitstream/handle/1721.1/15735/09275630-MIT.pdf

and here is the movie of the system operating:

https://www.youtube.com/watch?v=hMaaHI2s-4M

Richard Paul’s dissertation references Ernst’s, though in my defense I had not been able to find a copy of Paul’s dissertation until Holly Yanco sent me a link after I had written the essay above.

Now, having quickly read the dissertation here is some commentary.

First thing I note is that Claude Shannon was his Ph.D. advisor. (Marvin Minsky was also on his committee.) Many people think of Shannon as the information theory guy, or even the Boolean logic of circuit design guy. And he was those two guys. But as I have been digging through the earliest history of AI for the book I am working on (Dan Dennett just sent me email yesterday, saying “Ah, it is your juice book!” — he is right) I find Shannon in more and more places on the origins of AI proper. We’ve all known about his mechanical mouse for maze solving but this is the first time that I have seen him connected to robotics proper, and right at the start of it of course.

Indeed, Ernst did use an electric arm. It was based on a purchased “American Machine and Foundry Servo Manipulator”. From searching for that term, it looks like a person would move a pair of reference arms around, and these somehow servo-controlled a pair of actuated arms (each 6 DOF with a single parallel-jaw gripper) at a remote location. It looks like Ernst used a single such remote arm. He says he put an electric motor and potentiometer on each DOF, so I can’t tell what the original arm used, or whether it was hydraulically actuated. But in any case he did turn the arm into an electrically controlled arm, and then built a custom controller with 150 transistors, 300 diodes, and 32 vacuum tubes. As far as I can tell this was an analog control unit with set points sent to it by the computer. So it was not digitally servoed, as Scheinman’s Stanford Arms were later in the decade.

The computer that controlled it was the TX-0 at Lincoln Labs, MIT, a transistorized machine with 18-bit words in magnetic core memory. Ernst says that it did not have a multiply instruction. DEC (Digital Equipment Corporation) later made a “cleaned up” version of the TX-0 as its PDP-1 computer.

Ernst goes to some length to diss Danny Bobrow’s attempt to analyze robot motion using coordinate transforms, which is certainly how Scheinman’s arms, and those that came after them, were controlled. Paul’s thesis discusses this at length.

Looking at the movie, it seems that Ernst was able to take advantage of the kinematics of the American Machine and Foundry arm and get away without coordinate transforms for the particular tasks he was interested in. A very long link points down towards the table, like a pendulum, and the hand is on a rotary joint whose axis is parallel to the table. Side-to-side motion is done by swinging the long link, but the hand does not rise very much from the table surface. The whole link is then dropped vertically until the hand is in contact with the table. Page 31 of the dissertation shows some touch sensors, which must be in contact with the table at this point. I can’t tell whether Ernst then servos the hand so that both fingers are touching the table (there may not be enough sensors on the right finger for that; I can’t tell) or sets the joint to be passive and uses downward pressure to bring the fingers back to the same height above the table. Now he lifts the whole arm just a little, so the fingers are again parallel to the table top and a small height above it, and locally they can be skimmed around, looking for a target object with some of the other touch sensors on the fingers.

His solution was not general and probably didn’t require multiplication. Bobrow’s solution was general and certainly did require multiplication.
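To make the multiplication point concrete, here is the textbook planar case (my own illustration, not taken from Bobrow’s or Ernst’s work). A joint that rotates by an angle θ and is offset by (t_x, t_y) maps a point in its own frame into its parent’s frame with a homogeneous transform:

$$
\begin{pmatrix} x' \\ y' \\ 1 \end{pmatrix}
=
\begin{pmatrix} \cos\theta & -\sin\theta & t_x \\ \sin\theta & \cos\theta & t_y \\ 0 & 0 & 1 \end{pmatrix}
\begin{pmatrix} x \\ y \\ 1 \end{pmatrix},
\qquad
x' = x\cos\theta - y\sin\theta + t_x, \quad
y' = x\sin\theta + y\cos\theta + t_y .
$$

That is already four multiplications per point per joint in 2D, before computing the sines and cosines. A six-joint arm in 3D chains six 4×4 matrices, and on a machine with no multiply instruction every one of those products has to be done in software.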

Then, in chapter 7, Ernst describes his MHI, the Mechanical Hand Interpreter. This is a robot-specific computer language for the whole arm plus the hand. It is interpreted, which at the time would have been very novel. He argues that it is OK to interpret the language rather than compile it, because by his calculation the cost of a given amount of computation is coming down by a factor of two every year.
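One way to spell out the arithmetic behind that argument (my gloss; the overhead factor k is an illustrative symbol, not a number from the dissertation): if an interpreted program needs k times the computation of a compiled one, C_0 is what the compiled program costs to run today, and the cost per unit of computation halves every year, then after n years

$$
C_{\text{interp}}(n) = k \, C_0 \, 2^{-n},
\qquad
C_{\text{interp}}(n) \le C_0 \iff n \ge \log_2 k .
$$

So an interpreter that is, say, 8 times slower costs no more after three years than compiled code does today.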

The language looks like a computer language, sort of. It seems that it is really about writing small finite state machines, one for each specified motion, though the lines of code do not correspond to the states (as in Shakey, or subsumption). Rather, the state bits (f1, f2, in the only example in this chapter, given on page 35 of the dissertation) are set by a set of “until” conditions, whose order in the source code determines which bit, f1, f2, etc., each one sets. It looks like some checks (“ifgoto” and “ifcontinue”) are run at regular intervals, and they may determine that we should go on to another motion or continue the current one. So it is not a conventional procedural computer language in the way that WAVE, AL, and VAL all were; the timing and parallelism aspects are much more smeared over multiple statements.
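The control structure as I read it can be sketched in a few lines of Python. This is a hypothetical reconstruction, not Ernst’s syntax or semantics: the names `Motion`, `run`, `untils`, `gotos`, and the `"stop"` target are all my inventions, and the toy task is the lower-until-touch-then-lift-a-little behavior seen in the movie. Each tick the current motion advances, every “until” condition is re-evaluated into flags f1, f2, … in source order, and the first goto whose flag is set switches motions; otherwise the motion simply continues.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

World = Dict[str, float]  # hypothetical simulated arm/sensor state


@dataclass
class Motion:
    """One MHI-style motion (hypothetical): a step, its "until" conditions, its exits."""
    step: Callable[[World], None]          # advance the arm by one tick
    untils: List[Callable[[World], bool]]  # condition i sets flag f(i+1)
    # (flag index, next motion) pairs tried in order, loosely like "ifgoto" checks
    gotos: List[Tuple[int, str]] = field(default_factory=list)


def run(motions: Dict[str, Motion], start: str, world: World,
        max_ticks: int = 100) -> List[str]:
    """Tick the current motion, re-checking flags each tick; return motions visited."""
    name = start
    trace = [name]
    for _ in range(max_ticks):
        motion = motions[name]
        motion.step(world)
        flags = [cond(world) for cond in motion.untils]
        next_name: Optional[str] = None
        for index, target in motion.gotos:
            if flags[index]:
                next_name = target
                break
        if next_name is None:
            continue  # loosely like "ifcontinue": keep doing the current motion
        if next_name == "stop":
            break
        name = next_name
        trace.append(name)
    return trace


# Toy task: lower until a touch sensor fires, then lift a little.
def lower(world: World) -> None:
    world["z"] = max(0.0, world["z"] - 1.0)


def lift(world: World) -> None:
    world["z"] += 0.5


motions = {
    "lower": Motion(lower, [lambda w: w["z"] <= 0.0], [(0, "lift")]),  # f1: touch
    "lift": Motion(lift, [lambda w: w["z"] >= 0.5], [(0, "stop")]),    # f1: clearance
}

world = {"z": 3.0}
print(run(motions, "lower", world), world["z"])  # ['lower', 'lift'] 0.5
```

The state lives in the flags and in which motion is current, not in line-by-line program order, which is roughly the “smeared” quality I mean.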

A second example of an MHI program in chapter 8 has more than one of these finite state machines, and confirms my interpretation of switching between them, but there are also some other statements with unexplained semantics, so this is not the whole story.

Ernst then goes on (in chapter 8) to describe a higher-level language, THI, or Teleological Hand Interpreter. Here the idea is that instead of specifying motions and a finite state machine, one specifies goals, though in the context of a motion. I find the examples confusing, but it looks like he is trying to have a single line of code stand for a whole finite state machine, and perhaps is using templates to generate something like an MHI program.

In any case, he reports that there is no improvement in success rate over a fully hand written program, as THI really doesn’t know what to do when the world is different than expected.

Ernst was born in Switzerland in 1933 and buried there in 2005. I cannot find anything else about him, beyond this amazing Sc.D. dissertation.

That Ernst video is the same one I have. I love the part where he has his feet up, smoking a pipe, to make the point the robot is doing the work while the human relaxes.

But I think the most interesting thing is that the system depends so much on directly mapping from sensor signals to actions. He seemed to want to minimize use of models, use of state. Anticipating some of your work?

Somebody told me he was last seen on a yacht in the Mediterranean, but that somebody gave me no source. It was just a rumor, and now I cannot even remember who told me.

Rodney,

The Gold Arm: “It has five revolute joints and one linear axis.” I see two linear axes. Isn’t the gripper linear too?

Love this sort of stuff, thank you!

Typically the gripper is not counted in the DOFs of an arm. The arm DOFs are those that let it place the gripper in a position and orientation. The Gold Arm has 5 revolute joints and one linear joint before getting to the gripper. There is a workspace where the gripper can be at any position and orientation (six degrees of freedom).