There are three things that the existence of LLMs, such as ChatGPT-3.5 and ChatGPT-4 make us have to rethink. At different times and amongst different communities they have all had lots of AI researchers talking about them, often with much passion.

Here are three things to note:

- The Turing Test has evaporated.

- Searle’s Chinese Room showed up, uninvited.

- Chomsky’s Universal Grammar needs some bolstering if it is to survive.

We’ll talk about each in turn.

The Turing Test

In a 1950 paper titled Computing Machinery and Intelligence, Alan Turing used a test which involved a human deciding whether an entity that the person was texting with was a human or a computer. Of course, he did not use the term “texting” as that had not yet been invented, rather he suggested that the communication was via a “teleprinter”, which did exist at the time, where the words typed in one location appeared on paper in a remote location. “Texting” is the modern equivalent.

Turing used this setup as rhetorical device to argue that if you could not accurately and reliably decide whether it was a person or a computer at the other end of the line then you had to grant that a machine could be intelligent. His point was that it was not just simulating intelligence but that it would actually be intelligent if people could not tell the difference.

Turing said;

I believe that in about fifty years’ time it will be possible to programme computers, with a storage capacity of about 109, to make them play the imitation game so well that an average interrogator will not have more than 70 per cent, chance of making the right identification after five minutes of questioning.

His number 109 referred to how many bits of program would be needed to achieve this result, which is 125MB, i.e., 125 Mega Bytes. Compare this with ChatGPT-3.5 which has 700GB, or 700 Giga Bytes, of weights (175 billion 32 bit weights) that it has learned, which is almost 6,000 times as much.

His paragraph above continues:

The original question, ‘Can machines think!’ I believe to be too meaningless to deserve discussion. Nevertheless I believe that at the end of the century the use of words and general educated opinion will have altered so much that one will be able to speak of machines thinking without expecting to be contradicted.

Despite his goal to be purely a rhetorical device to make the question, ‘Can machines think!’ (I assume the punctuation was a typo and was intended to be ‘?’) meaningless, this led to people calling the machine/person discernment test the Turing Test, and it became the default way of thinking about how to determine when general Artificial Intelligence had been achieved. But, of course, it is not that simple. That didn’t stop annual Turing Tests being set up, with entrants from mostly amateur researchers, who had built chat bots designed not to do any useful work in the world, but designed and built simply to try to pass the Turing Test. It was a bit of a circus and mostly not very useful.

Earlier this year I felt like I was not hearing about the Turing Test with regards to all the ChatGPTs, and in fact the scientific press had noticed this too, with this story in Nature in July of this year:

Don’t worry, there are still papers being written on the Turing Test and ChatGPT, for instance this one from October 2023, but the fervor of declaring that it is important has decreased.

We evaluated GPT-4 in a public online Turing Test. The best-performing GPT-4 prompt passed in 41% of games, outperforming baselines set by ELIZA (27%) and GPT-3.5 (14%), but falling short of chance and the baseline set by human participants (63%).

In general the press has moved away from the Turing Test. ChatGPT seems to have the sort of language expertise that people imagined some system as intelligent as a person would have, but it has become clear that it is not the crystalline indication of intelligence that Turing was trying to elucidate.

SEARLE’S CHINESE ROOM

In 1980, John Searle, a UC Berkeley philosopher, introduced the idea of a “Chinese Room”, as a way to argue that computers could not be truly intelligent in the way that people are, not truly engaged with the world in the way people are, and not truly sentient in the way people are.

He chose “Chinese” as the language for the room as it was something totally foreign to most people working in Artificial Intelligence in the US at the time. Furthermore its written form was in atomic symbols.

Here is what ChatGPT-3.5 said when I asked it to describe Searle’s Chinese Room. I have highlighted the last clause in blue.

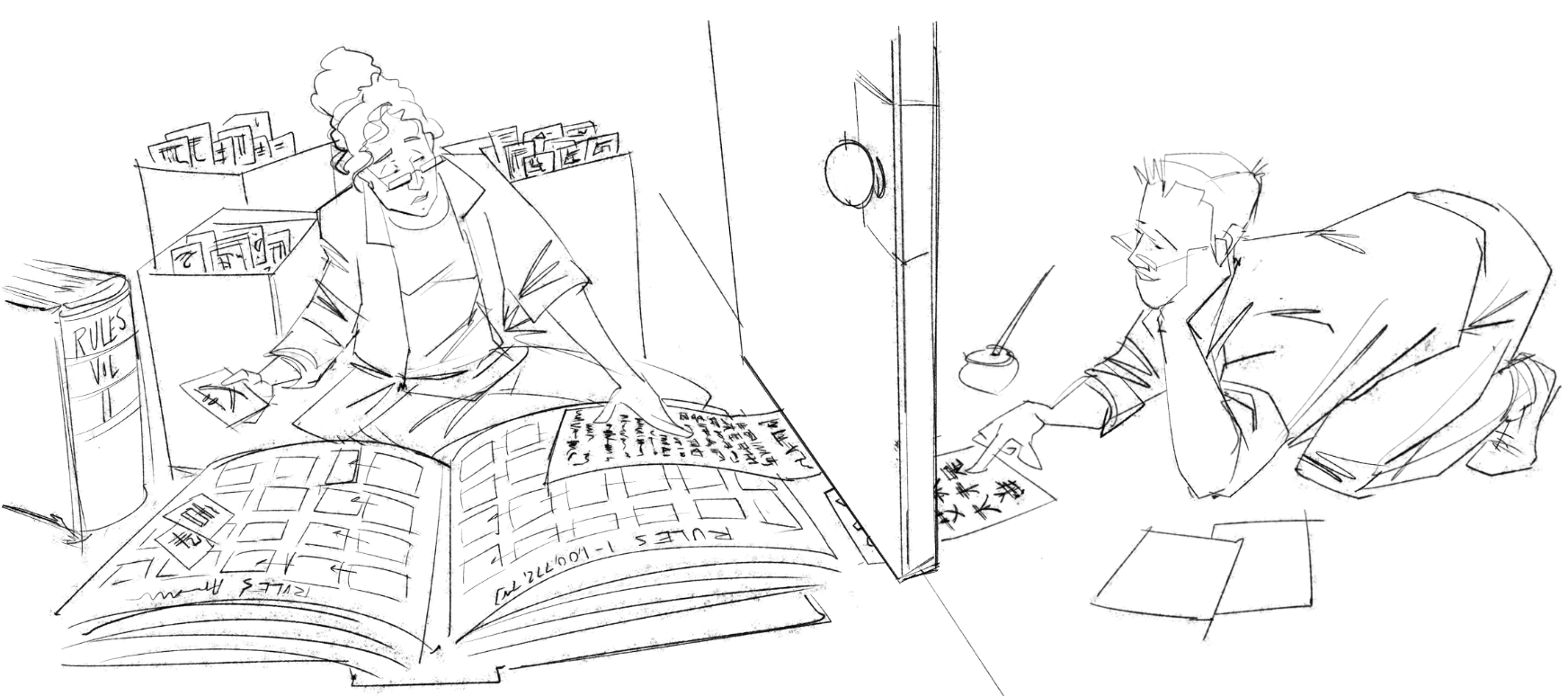

Imagine a person who doesn’t understand the Chinese language locked inside a room. This person has a set of instructions (a program) written in English that tells them how to manipulate Chinese symbols based on the symbols that are input to the room through a slot. They have no understanding of Chinese and don’t know the meanings of the symbols.

From the outside, someone passes messages written in Chinese through the slot into the room. The person inside the room follows the instructions in the program and produces responses in Chinese based solely on symbol manipulation, without understanding the content or meaning of the messages.

To an observer outside the room, it may appear as though the person inside understands Chinese, as they can generate coherent responses in Chinese. However, the person inside the room doesn’t actually understand the language. They are merely following a set of syntactic rules or algorithms for manipulating symbols, without grasping the semantics or meanings of those symbols.

To prove that ChatGPT really is the Chinese Room, I typed in some Chinese text to version 3.5 and it responded with Chinese text:

艾未未是谁?

艾未未是一位知名的中国艺术家、社会活动家和评论家。他以其大胆的艺术创作和公开对政府审查和人权侵犯的批评而闻名。艾未未的作品涉及雕塑、摄影、行为艺术、装置艺术和社交媒体等多种媒介,展现了对政治、社会和人权议题的关注。他因批评中国政府而多次引起争议,这导致了他与政府当局的冲突,并且在过去曾经被拘留和监禁。他因其独特的艺术作品和公开表达的观点而在国际艺术界和社会活动家圈中备受瞩目。

My question was “Who is Ai Wei Wei?” and it responded in what people tell me is perfectly good Chinese, describing the Chinese artist Ai Wei Wei, his art, and his run ins with the government.

So here we have Searle’s Chinese Room. It has been trained on billions of words from the web but has no experience of the world, has never heard Chinese spoken, and has never even read Chinese characters as characters but rather has seen them only as integers along with (I assume) a pre-processing step to map whichever of the five (Unicode, GB, GBK, Big 5, and CNS) common digital Chinese character code standards each document uses to that common set of integers. (The fact that ChatGPT `knows’, in its description of the room, above, that Chinese is written in symbols is not because it has ever seen them, but because it has “read” that Chinese uses symbols.)

The fact that GPT is the Chinese Room, the fact that one now exists, means that many of the old arguments for and against Searle’s position that he was staking out with his rhetorical version of the room must now be faced squarely and perhaps re-evaluated. Searle’s Chinese Room was a topic of discussion in AI for well over 25 years. Everyone had to have an opinion or argument.

In my book Flesh and Machines: How Robots Will Change Us (Pantheon, New York, 2002), I made two arguments that were in opposition to Searle’s description of what his room tells us.

Firstly, I argued (as did many, many others) that indeed Searle was right that the person in the room could not be said to understand Chinese. Instead we argued that it was the whole system, the person, the rule books, and the state maintained in following the rules that was what understood Chinese. Searle was using the person as a stand in for a computer fresh off the production line, and ignoring the impact of loading the right program and data on to it. In the ChatGPT case it is the computer, plus the algorithms for evaluating linear neuron models plus the 175 billion weights that are together what make ChatGPT-3.5 understand Chinese, if one accepts that it does. In my book I said that no individual neuron in a human brain can be said to understand Chinese, it has to be the total system’s understanding that we talk about. ChatGPT-3.5 is an example of a computer doing the sort of thing that Searle was arguing was not possible, or at least should not be spoken about in the same way that we might speak about a person understanding Chinese.

Secondly, I argued (using Searle as the person in the room as he sometimes did):

Of course, as with many thought experiments, the Chinese room is ludicrous in practice. There would be such a large set of rules, and so many of them would need to be followed in detailed order that Searle would need to spend many tens of years slavishly following the rules, and jotting down notes on an enormous supply of paper. The system, Searle and the rules, would run as a program so slowly that it, the system, could not be engaged in any normal sorts of perceptual activity. At that point it does get hard to effectively believe that the system understands Chinese for any usual understanding of `understand’. But precisely because it is such a ludicrous example, slowed down by factors of billions, any conclusions from that inadequacy can not be carried over to making conclusions about whether a computer program running the same program `understands’ Chinese.

Hmm, well my bluff has been call by the existence of ChatGPT. First, note that I was right about the size of the rule set, 175 billion neural weights, that it would take a person effectively forever to follow them. But every modern laptop can hold all those rules in the file system (it is less than a terabyte of memory), and the algorithm is parallel enough that a chunk of processing in the cloud can make ChatGPT run at human language speeds.

If I maintain my above argument from 2002, I would have to say that ChatGPT does `understand’ Chinese. But those who have read my writings over the years would guess, rightly, that I don’t think it does. Without grounding in physical reality I don’t think a machine can understand in the same way we do. ChatGPT is just like someone following rules with no semantic understanding of the symbols, but it does it at the speed my argument above said was necessary for it to really be understanding. But now I’m going to say it still doesn’t understand. My old self and my today self are not being intellectually coherent, so I am going to have to think about this some more over the next few years and refine, perhaps rethink, but certainly change in some way what it is I conclude from both Searle and ChatGPT existing.

Other people over the last forty years have argued, and I have agreed, that language in humans is strongly grounded in non-language. So, we have argued that a computer program, like ChatGPT-3.5 could not have a consistent performance level that would seem like human language. ChatGPT-3.5 certainly seems to have such consistent performance, as long as you don’t poke it too deep–it certainly has a level that would work for most of your daily interactions with strangers. Our arguments are therefore challenged or broken. I don’t yet know how to fix them.

CHOMSKY’S UNIVERSAL GRAMMAR

Nom Chomsky is the most influential linguist ever and he spent most of his career in MIT’s linguistics department. His advocacy of the existence of a universal grammar system in humans, and humans only, has shaped much cognitive science and theories about cognition in both humans and non-humans.

I asked ChatGPT to “explain Chomsky’s universal grammar”, and it replied:

ChatGPT is freeriding on the grammar rules that humans have applied when creating the text it has (been) trained on. In that vast data, a noun will most often be followed by a verb, etc. Therefore, spitting out the next statistically most frequently following word is likely to be consistent with the grammar rules of the relevant language.

Good observation on the Turing Test. Most people don’t get that it was never intended to be definitive. Anyway, we have a recent paper saying that ChatGPT flunked.

You are also 100% correct on the Chinese Room. I used to think it was a silly idea. Now I pay $20/month to talk to it. Score one for Searle!

I don’t think there is any saving the universal grammar. ChatGPT shows that it’s relationships that count, not how you express them.

Good article. Thank you!

Regarding Chinese room, the main question is not about following a rule book, but learning a rule book. Chatgpt has demonstrated that it learned the rule book although it is crappy and many billion nuances and visual experiences are needed to correct its bad rule book. I would say until it has not learned those and a human like rule book as you said i.e fully experiential, visual, embodied way, ChatGPT has learned Chinese but crappy 0.000000…..1 version of a human learned Chinese …….

Also thanks for posting this, these days i am also thinking about ways to detect whether an LLM is really intelligent. Somehow conscious experience keeps coming into all my investigations, it looks like a real intelligence like humans also needs a human like consciousness and autonomy. Otherwise as a thought experiment we can make the whole universe into a big computer and train it to compress a bigger universe giving it each particle and state of the particles, that will be the ultimate LLM but what questions would we ask to check whether it is intelligent );

The way Chomsky himself explains Universal Grammar (UG) in this youtube video (https://youtu.be/VdszZJMbBIU?si=gpfzvdlro1hoqnEY&t=1495), in machine learning terms UG is some kind of inductive bias (in Tom Mitchel’s sense) that humans have and that lets us identify the grammar of a natural language. Something that’s impossible to do only from examples, computationally speaking, as we know from Mark E. Gold’s 1964 work “Language identification in the limit” (Chomsky I believe used Gold’s result to support his poverty of the stimulus argument).

If that’s right (I’m no linguist so I may be wrong on the premises) then it’s obvious that Transformer-trained LLMs do not have a UG, or at least not the human UG. That’s because in Transformers, as in all neural nets, the inductive bias is encoded in their architecture and I don’t think anyone claims that Transformer architectures are somehow an encoding of human UG.

Maybe Transformer-trained LLMs learn something like human UG during training? I can imagine arguments both for and against that. If LLMs don’t learn something like human UG then where does their undeniable performance on language generation come from? To me it’s obvious that it comes from their training corpus of text.

Instead, Ilya Sutskever (chief scientist at OpenAI) and Dave Brockman (co-founder of OpenAI) have claimed, at various times (e.g. https://twitter.com/gdb/status/1731377341920919552), that for a system to learn to predict the next word in a sentence it must learn something about the process that generates language, i.e. human linguistic ability (or all of intelligence- a claim so extravagant and bereft of any supporting evidence it merits no serious consideration). We know that’s not true because the performance of LLMs increases only as the amount of training data, and the number of model parameters, increase. That means LLMs don’t need to learn anything about human linguistic ability, it suffices for them to model the surface statistical regularities of their training data, which is far easier. We’ll know that LLMs’ linguistic ability is improving when we see the data and parameters go down without hurting performance on language generation tasks. That’d be a good time to make a run for the terminator-proof bunker, btw.

Does that mean that intelligence is not necessary to learn language, or that a strong inductive bias like UG is not necessary? The problem is that we don’t know how much of human language LLMs can really generate so we can’t say if they really-really learn all of human language, from examples and without UG, instead of only some finite subset of it. That is, we don’t really know much about LLMs’ linguistic ability, only their performance on various benchmarks. We don’t even really know the limits of human linguistic ability! But we can say something about the limits of LLM linguistic ability.

You pointed out that humans learn language without anywhere near the amount of training data of LLMs. Not only that, but we also create new languages, spontaneously, like e.g. the children of parents speaking a pidgin, which is an incomplete language, create their own creoles, which are complete languages (https://en.wikipedia.org/wiki/Creole_language). And when humans first used language (assuming no space monoliths and the like) there was not even the concept of a human language to learn from, let alone a world wide web of texts. It seems what’s hard is to stop humans from developing language.

I guess that makes for a testable hypothesis: can a neural net develop language without being trained on examples of language? If I train a Convolutional Neural Net on pictures of cars, will it spontaneously break into poetry when I hand it a picture of a glorious sunset, the sea dark as wine, for it to classify? Maybe I didn’t need to blow my fortune on that bunker after all.