On January 1st, 2018, I made predictions about self driving cars, Artificial Intelligence and machine learning, and about progress in the space industry. Those predictions had dates attached to them for 32 years up through January 1st, 2050.

I made my predictions because at the time I saw an immense amount of hype about these three topics, with the general press and public concluding that all sorts of things they feared (e.g., truck driving jobs about to disappear, all manual labor of humans about to disappear) or desired (e.g., safe roads about to come into existence, a safe haven for humans on Mars about to start developing) were imminent. My predictions, with dates attached to them, were meant to slow down those expectations, and inject some reality into what I saw as irrational exuberance.

I was accused of being a pessimist, but I viewed what I was saying as being a realist. Out of emotion I wanted to give some other predictions that went against the status quo expectations at the time. Fortunately I was able to be rational and so I only made one prediction that I view in retrospect as being hot headed (and I ‘fess up to it in the AI&ML table in this post). Anyone can make predictions, and many people do. But usually they are not held to those predictions. So I am holding myself accountable to my own predictions.

As part of self certifying the seriousness of my predictions I promised to review them, as made on January 1st, 2018, every following January 1st for 32 years, the span of the predictions, to see how accurate they were. This is my third annual review and self appraisal, following those of 2019 and 2020. Only 29 to go!

I think in the three years since my predictions, there has been a general acceptance that certain things are not as imminent or as inevitable as the majority believed just then. So some of my predictions now look more like “of course”, rather than “really, that long in the future?” as they did in 2018.

This is a boring update. Despite lots of hoopla in the press about self driving cars, Artificial Intelligence and machine learning, and the space industry, this last year, 2020, was not actually a year of any surprises.

The biggest news of all is that SpaceX launched humans into orbit twice in 2020. It was later than they had predicted, but within my predicted timeframe. Neither they nor I are surprised or shocked at their accomplishment, but it is a big deal. It changes the future space game for the US, Canada, Japan, and Europe (including the UK…). Australia and New Zealand too. It gives countries in Asia, South America and Africa more options on how they plot their space ambitions. My heartfelt congratulations to Elon and Gwynne and the whole gang at SpaceX.

This year’s summary indicates that so far none of my predictions (except for the one hot headed one I mentioned above) have turned out to be too pessimistic. As I said last year, overall I am getting worried that I was perhaps too optimistic, and had bought into the hype too much.

Some might claim that 2020 was special because of Covid, and that any previous predictions, made by others, more optimistic than I, should not count, because something unforeseen happened. No, I will not let you play that game. The reason that predictions turn out to be overly optimistic is that the people making the predictions underestimated the uncertainties in bringing any technology to fruition. If we allow them to claim Covid got in the way, we would also have to allow them to claim that something was harder than they expected and that got in the way, or that the market didn’t like their product and that got in the way. Etc. My predictions are precisely about the unexpected having bigger impact than other people making predictions expect. Covid is just one of a long line of things people do not expect.

Repeat of 2019’s Explanation of Annotations

As I said in 2018, I am not going to edit my original post, linked above, at all, even though I see there are a few typos still lurking in it. Instead I have copied the three tables of predictions below from 2020’s update post, and have simply added a total of eight comments to the fourth columns of the three tables. As with last year I have highlighted dates in column two where the time they refer to has arrived.

I tag each comment in the fourth column with a cyan colored date tag in the form yyyymmdd such as 20190603 for June 3rd, 2019.

The entries that I put in the second column of each table, titled “Date” in each case, back on January 1st of 2018, have the following forms:

NIML, meaning “Not In My Lifetime”, i.e., not until beyond December 31st, 2049, the last day of the first half of the 21st century.

NET some date, meaning “No Earlier Than” that date.

BY some date, meaning “By” that date.

Sometimes I gave both a NET and a BY for a single prediction, establishing a window in which I believe it will happen.

For now I am coloring those statements when it can be determined already whether I was correct or not.

I have started using LawnGreen (#7cfc00) for those predictions which were entirely accurate. For instance a BY 2018 can be colored green if the predicted thing did happen in 2018, as can a NET 2019 if it did not happen in 2018 or earlier. There are five predictions now colored green, the same ones as last year, with no new ones in January 2020.

I will color dates Tomato (#ff6347) if I was too pessimistic about them. No Tomato dates yet. But if something happens that I said NIML, for instance, then it would go Tomato, or if in 2020 something already had happened that I said NET 2021, then that too would have gone Tomato.

If I was too optimistic about something, e.g., if I had said BY 2018, and it hadn’t yet happened, then I would color it DeepSkyBlue (#00bfff). None of these yet either. And eventually if there are NETs that went green, but years later have still not come to pass I may start coloring them LightSkyBlue (#87cefa).

In summary then: Green splashes mean I got things exactly right. Red means provably wrong and that I was too pessimistic. And blueness will mean that I was overly optimistic.
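The coloring rules above are mechanical enough to sketch in code. Below is a minimal, hypothetical Python illustration, not a tool used to produce these tables. The function name `color_date`, the convention that each NET, BY, or NIML statement is colored on its own, and the `stale_after` threshold for LightSkyBlue (the text only says "years later", without a number) are all assumptions made for illustration.

```python
from typing import Optional

# NIML ("Not In My Lifetime") is treated as NET 2050, since it means
# not until beyond December 31st, 2049.
NIML_YEAR = 2050


def color_date(kind: str, year: Optional[int], review_year: int,
               happened_year: Optional[int] = None,
               stale_after: Optional[int] = None) -> Optional[str]:
    """Hypothetical sketch: color one NET, BY, or NIML date statement.

    review_year is the year of the January 1st review, so every earlier
    year has fully elapsed. happened_year is the year the predicted thing
    came to pass, or None if it has not happened. stale_after is an
    assumed threshold, in years, for LightSkyBlue. Returns None when the
    outcome cannot be determined yet.
    """
    if kind == "NIML":
        kind, year = "NET", NIML_YEAR
    if kind == "NET":
        if happened_year is not None and happened_year < year:
            return "Tomato"        # happened earlier than predicted: too pessimistic
        if review_year < year:
            return None            # the NET year has not arrived yet
        if (happened_year is None and stale_after is not None
                and review_year - year >= stale_after):
            return "LightSkyBlue"  # NET held, but still not here years later
        return "LawnGreen"         # did not happen before the NET year
    if kind == "BY":
        if happened_year is not None and happened_year <= year:
            return "LawnGreen"     # happened by the stated year
        if review_year > year:
            return "DeepSkyBlue"   # BY year passed without it: too optimistic
        return None
    raise ValueError(f"unknown date kind: {kind!r}")


# The dedicated freeway lane (NET 2021), reviewed on January 1st, 2021.
print(color_date("NET", 2021, review_year=2021))  # LawnGreen
# The first driverless taxi service (NET 2022) cannot be judged yet in 2021.
print(color_date("NET", 2022, review_year=2021))  # None
```

A prediction with both a NET and a BY is simply two calls, one per date, which matches coloring dates rather than whole rows.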

So now, here are the updated tables.

Self Driving Cars

Oh gosh. The definition of a self driving car keeps changing. When I was a boy, three or four years ago, it meant that the car drove itself. Now it means that a company gives a press release that they have deployed self driving cars, reporters give breathless headlines about deployment of said self driving cars, and then bury deep within the story that actually there is a person in the loop, sometimes in the car itself, and sometimes remotely. Oh, and the deployments are in the most benign public suburbs imaginable, and certainly nowhere that a complete and sudden emergency stop is an unacceptable action. And no mention of restrictions on time of day, or weather, or particular roads within the stated geographical area.

Dear technology reporters, after this table, where almost nothing has changed, save for two comments dated Jan 1st 2021, I’ll give you some questions to ask before you anoint corporate press releases as breaking technology stories. Just saying.

| Prediction [Self Driving Cars] | Date | 2018 Comments | Updates |
|---|---|---|---|
| A flying car can be purchased by any US resident if they have enough money. | NET 2036 | There is a real possibility that this will not happen at all by 2050. | |
| Flying cars reach 0.01% of US total cars. | NET 2042 | That would be about 26,000 flying cars given today's total. | |
| Flying cars reach 0.1% of US total cars. | NIML | | |
| First dedicated lane where only cars in truly driverless mode are allowed on a public freeway. | NET 2021 | This is a bit like current day HOV lanes. My bet is the leftmost lane on 101 between SF and Silicon Valley (currently largely the domain of speeding Teslas in any case). People will have to have their hands on the wheel until the car is in the dedicated lane. | 20210101 It didn't happen any earlier than 2021, so I was technically correct. But I really thought this was the path to getting autonomous cars on our freeways safely. No one seems to be working on this... |
| Such a dedicated lane where the cars communicate and drive with reduced spacing at higher speed than people are allowed to drive | NET 2024 | | |
| First driverless "taxi" service in a major US city, with dedicated pick up and drop off points, and restrictions on weather and time of day. | NET 2022 | The pick up and drop off points will not be parking spots, but like bus stops they will be marked and restricted for that purpose only. | 20190101 Although a few such services have been announced, every one of them operates with human safety drivers on board. And some operate on a fixed route and so do not count as a "taxi" service: they are shuttle buses. And those that are "taxi" services only let a very small number of carefully pre-approved people use them. We'll have more to argue about when any of these services do truly go driverless. That means no human driver in the vehicle, or even operating it remotely. 20200101 During 2019 Waymo started operating a 'taxi service' in Chandler, Arizona, with no human driver in the vehicles. While this is a big step forward, see comments below for why this is not yet a driverless taxi service. 20210101 It wasn't true last year, despite the headlines, and it is still not true. No, not, no. |
| Such "taxi" services where the cars are also used with drivers at other times and with extended geography, in 10 major US cities | NET 2025 | A key predictor here is when the sensors get cheap enough that using the car with a driver and not using those sensors still makes economic sense. | |
| Such "taxi" service as above in 50 of the 100 biggest US cities. | NET 2028 | It will be a very slow start and roll out. The designated pick up and drop off points may be used by multiple vendors, with communication between them in order to schedule cars in and out. | |
| Dedicated driverless package delivery vehicles in very restricted geographies of a major US city. | NET 2023 | The geographies will have to be where the roads are wide enough for other drivers to get around stopped vehicles. | |
| A (profitable) parking garage where certain brands of cars can be left and picked up at the entrance and they will go park themselves in a human free environment. | NET 2023 | The economic incentive is much higher parking density, and it will require communication between the cars and the garage infrastructure. | |
| A driverless "taxi" service in a major US city with arbitrary pick up and drop off locations, even in a restricted geographical area. | NET 2032 | This is what Uber, Lyft, and conventional taxi services can do today. | |
| Driverless taxi services operating on all streets in Cambridgeport, MA, and Greenwich Village, NY. | NET 2035 | Unless parking and human drivers are banned from those areas before then. | |
| A major city bans parking and cars with drivers from a non-trivial portion of a city so that driverless cars have free rein in that area. | NET 2027 BY 2031 | This will be the starting point for a turning of the tide towards driverless cars. | |
| The majority of US cities have the majority of their downtown under such rules. | NET 2045 | | |
| Electric cars hit 30% of US car sales. | NET 2027 | | |
| Electric car sales in the US make up essentially 100% of the sales. | NET 2038 | | |
| Individually owned cars can go underground onto a pallet and be whisked underground to another location in a city at more than 100mph. | NIML | There might be some small demonstration projects, but they will be just that, not real, viable mass market services. | |
| First time that a car equipped with some version of a solution for the trolley problem is involved in an accident where it is practically invoked. | NIML | Recall that a variation of this was a key plot aspect in the movie "I, Robot", where a robot had rescued the Will Smith character after a car accident at the expense of letting a young girl die. | |

I’m still not counting the Waymo taxi service in Chandler, a suburb of Phoenix, as a driverless taxi service. It has finally been opened to members of the public, and has no human driver actually in the car, but it is not a driverless service yet. What about the October 8th headline “Waymo finally launches an actual public, driverless taxi service”, with the lede “Fully driverless technology is real, and now you can try it in the Phoenix area”? No. It is not fully driverless. When you read down in the story you eventually find that, just as for the last year with a more restricted customer base, there is still a human in the loop. The story proudly proclaims that “[m]embers of the public who live in the Chandler area can hail a fully driverless taxi today”, but a few sentences later says the economics may not work out “because Waymo says the cars still have remote overseers”. That would be a person in the loop. Waymo doesn’t give a straight answer about how many cars a single overseer oversees, and refuses to say directly that it is more than one. I.e., they are not willing to say that there is less than one full person devoted to overseeing the control of each “driverless” taxi. This is not a driverless taxi service. Tech press headlines be damned. Oh, and by the way, Waymo’s aim was to get to 100 rides per week by the end of 2020. Not exactly a large scale deployment, and not open to the public at any sort of scale, and certainly not driverless.

But what about this December 9th headline: “Cruise is now testing fully driverless cars in San Francisco”? Again it pays to read the story. Carefully. There is a Cruise employee in the passenger seat of every one of the “fully driverless” cars and they can stop the car whenever they choose. And just to be sure, every vehicle is also monitored by a remote employee. Two safety humans for every one of these so called fully driverless cars. A story from the same day in the Washington Post reports that the testing will only be in the Sunset district of the city, and only on certain streets. SF residents know that that is a very residential area with very little traffic, and four way stop signs at intersections every block. The traffic moves very slowly even when the streets are deserted, and it is always safe to come to a complete stop.

Reporters should ask all companies saying they are testing fully driverless cars, whether as a taxi service or not, the following questions, at least.

- Is there an employee in the “driverless car”? Are they able to stop the car or take over in any way?

- Is there a remote person able to monitor the car? Are they able to take over in any way, even just commanding that the car stop, or being able to set a way point which will let the car get out of a stuck situation? How many cars does such a remote person monitor today (as distinct from aspirations for how many in the future)?

- Is there a chase vehicle following the driverless car to prevent rear end collisions from other random drivers? (I understand that Cruise does this in San Francisco; and I think I have seen it myself with a Waymo vehicle in the last week, but I may have misinterpreted.) If there is a chase vehicle does someone in that vehicle have the ability to command the driverless vehicle to stop? Note that a chase vehicle is a whole second car driven by a person just to enable the driverless car to be safe…

- Are there any restrictions on the time of day that the system operates?

- Will testing change if there is really bad weather or will it go on regardless?

- What is the area that the test extends over, and are there any streets that the cars will not go on?

- If it is a taxi service, are there designated pickup and drop off sites, or can those be anywhere? That includes stopping 3 meters further along at the passenger’s request (regular taxis and rideshare services do this).

- Once a passenger gets in can they change the desired destination? Can they abort the ride and get out whenever they want to?

- Can the passenger communicate with the car (not a remote person) by voice?

Most of these questions will have answers that the PR people, at this point, will not want to be forthcoming about. Sometimes they will be “unable to answer” even when it is a simple yes/no question (e.g., see the Cruise story linked in the questions above); that is likely not a sign of them not actually knowing the answer…

The only company “that says it has” tested fully driverless cars, with no chase vehicles, on California roads is Nuro, but that is a long way from a taxi service since they use special purpose tiny vehicles that cannot accommodate even one person.

Towards the end of the year AutoX, a company based in China, said it was operating a driverless taxi service in a number of cities. First note, however, that the service is not open to the public, and is only available for demonstrations by company employees. They say they have removed both drivers in the cars and remote drivers, but none of the articles I have seen have answered the comprehensive set of questions I have above, including whether there is a person doing remote monitoring rather than remote driving. The one video I have seen is from inside an empty vehicle as it drives on empty(!!) roads. I have spent a lot of time in China in a lot of different cities, and I have never seen an empty road.

I am not saying there is anything wrong with these small steps towards driverless cars. They are sensible, and necessary steps along the way. But if you look at the headlines, and even the first few paragraphs of most stories about driverless cars, they breathlessly proclaim that the future is already here. No it is not. Read the fine print. We are very far from an actual driverless taxi service that can compete with a traditional taxi company (which has to make money), or even one of the ride share companies which themselves are still losing money. The deployed demonstration systems have many more people per car working full time than any of these other systems, are only operating a tiny number of rides per day, and would not be profitable even if each ride cost a thousand times what the incumbents charge. We are still a long, long way from a viable profitable autonomously driven taxi service.

The last two years have seen a real shakeout in expectations for deployment of self driving cars. The most recent example of this was in December 2020, when Uber sold their self driving car efforts for minus $400 million. That’s right. They gave away the division working on self driving cars, once thought to be the way to finally make Uber profitable, and sent along with it $400 million in cash. [[… If only they had asked me. I might have bought it for minus $350 million! A relative bargain for Uber!]]

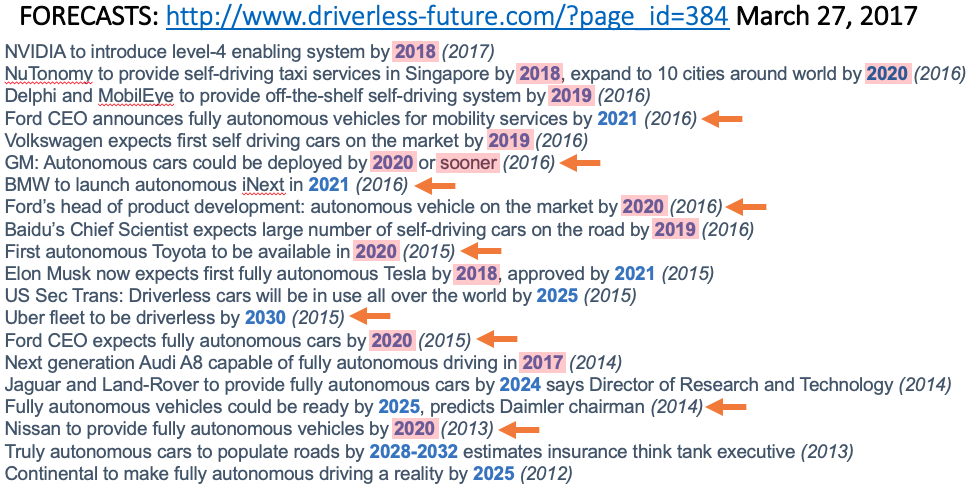

To illustrate how predictions have been slipping, here is a slide that I made for talks, based on a snapshot of predictions about driverless cars from March 27, 2017. The web address still seems to give the same predictions with a couple more at the end that I couldn’t fit on my slide. In parentheses are the years the predictions were made, and in blue are the dates for when the innovation was predicted to happen. The orange arrows point at predictions that I have since seen companies retract. I may have missed some retractions. The dates highlighted in red have passed, without coming to fruition. None of the other predictions have so far borne fruit.

In summary, twelve of the twenty one predictions have passed and vanished into the ether. Of the remaining nine I am confident that the three for 2021 will also not be attained. And then there are none that come due until 2024. I think it is fair to say that predictions for autonomous vehicles in 2017 were wildly overoptimistic. My blog posts of January 2017 and July 2018 tried to tamp them down by talking about deployment issues that were not being addressed, and that, in my opinion, needed large amounts of research to solve. It turns out that, even before you get to my concerns, the long tail of out of the ordinary situations (and not even the really long tail, just some pretty common aspects of normal driving in most cities and on freeways) has delayed the revolution.

Two more car topics

A year ago, in my update at the start of 2020, I had a little rant about a prediction that Elon Musk had made about how there would be a million Tesla robo-taxis on the road by the end of 2020. His prediction certainly helped Tesla’s stock price. Here is my rant.

<rant>

At the same time, however, there have been more outrageously optimistic predictions made about fully self driving cars being just around the corner. I won’t name names, but on April 23rd of 2019, i.e., less than nine months ago, Elon Musk said that in 2020 Tesla would have “one million robo-taxis” on the road, and that they would be “significantly cheaper for riders than what Uber and Lyft cost today”. While I have no real opinion on the veracity of these predictions, they are what is technically called bullshit. Kai-Fu Lee and I had a little exchange on Twitter where we agreed that together we would eat all such Tesla robo-taxis on the road at the end of this year, 2020.

</rant>

Well, surprise, everyone! 2020 has come and gone and there are not a million Tesla robo-taxis on the road. There are zero. I, and Kai-Fu Lee, both had complete confidence in our predictions of zero. And we were right. Don’t worry. Undaunted by complete and abject failure to predict accurately, twice again, Elon has new predictions. In July he predicted level 5 autonomy in 2020, and in December he made predictions of full autonomy in 2021, with his normal straight face. Those predictions have again pumped up Tesla’s stock price. Never give up on a winning strategy!

Finally I want to say something about flying cars. As with “self driving cars” the definition has been changing as PR departments for various companies try to claim progress in this domain. My predictions were about cars that could drive on roads, and could take to the air and fly when their driver wanted them to. Over the last couple of years “flying car” has been perverted to mean any small one or two person flying machine, usually an oversize quadcopter or octodecacopter, or some such, that takes off and lands and then stays exactly where it is until it takes off again. These are not cars. They are helicopters. With lots of rotors. They are not flying cars.

Artificial Intelligence and Machine Learning

I had not predicted any big milestones for AI and machine learning for the current period, and indeed there were none achieved. In the world of AI hype that is a bold statement to make. But I really do believe it. There have been overhyped stupidities, and I classify GPT-3 as one; see the analysis at the end of this section.

No research results from this last year are easily understood by someone not in the thick of the actual research. Compare this to the original introduction of the perceptron, or back propagation, or even deep learning. Geoff Hinton was able to convey the convergence of three or four ideas that came to be known as deep learning, in a single one hour lecture, that a non-specialist could understand and appreciate. There have been no such innovations in the last year. They are all down in the weeds.

I am not criticizing research being down in the weeds. That is how science and engineering gets done. Lots and lots of grunt work making incremental improvements. But thirty years from now we probably won’t be saying, in a positive way, “remember AI/ML advances in 2020!” This last year will blend and become indistinguishable from many adjacent years. Compare that to 2012, the first time Deep Learning was applied to ImageNet. The results shocked everyone and completely changed two fields, machine learning and computer vision. Shock and awe that has stood the test of time.

In last year’s review I had some thoughts and links to things such as the growing carbon footprint of deep learning, comparisons with how quickly people learn, and the non generalizability of games that are learned with reinforcement learning. I also argued why game playing has been such a success for DeepMind, and why it will probably not generalize as well as people expect. These comments occurred both just before and just after last year’s version of the table below. I stand by all those comments and invite you to go look at them.

In the table below I have added only three comments, two of which relate to how people look at AI and ML, and one to a missing capability; none of them are about technical advances.

| Prediction [AI and ML] | Date | 2018 Comments | Updates |
|---|---|---|---|
| Academic rumblings about the limits of Deep Learning | BY 2017 | Oh, this is already happening... the pace will pick up. | 20190101 There were plenty of papers published on limits of Deep Learning. I've provided links to some right below this table. 20200101 Go back to last year's update to see them. |
| The technical press starts reporting about limits of Deep Learning, and limits of reinforcement learning of game play. | BY 2018 | | 20190101 Likewise some technical press stories are linked below. 20200101 Go back to last year's update to see them. |
| The popular press starts having stories that the era of Deep Learning is over. | BY 2020 | | 20200101 We are seeing more and more opinion pieces by non-reporters saying this, but still not quite at the tipping point where reporters come out and say it. Axios and WIRED are getting close. 20210101 While hype remains the major topic of AI stories in the popular press, some outlets, such as The Economist (see after the table), have come to terms with DL having been oversold. So we are there. |
| VCs figure out that for an investment to pay off there needs to be something more than "X + Deep Learning". | NET 2021 | I am being a little cynical here, and of course there will be no way to know when things change exactly. | 20210101 This is the first place where I am admitting that I was too pessimistic. I wrote this prediction when I was frustrated with VCs and let that frustration get the better of me. That was stupid of me. Many VCs figured out the hype and are focusing on fundamentals. That is good for the field, and the world! |
| Emergence of the generally agreed upon "next big thing" in AI beyond deep learning. | NET 2023 BY 2027 | Whatever this turns out to be, it will be something that someone is already working on, and there are already published papers about it. There will be many claims on this title earlier than 2023, but none of them will pan out. | 20210101 So far I don't see any real candidates for this, but that is OK. It may take a while. What we are seeing is new understanding of capabilities missing from the current most popular parts of AI. They include "common sense" and "attention". Progress on these will probably come from new techniques, and perhaps one of those techniques will turn out to be the new "big thing" in AI. |
| The press, and researchers, generally mature beyond the so-called "Turing Test" and Asimov's three laws as valid measures of progress in AI and ML. | NET 2022 | I wish, I really wish. | |
| Dexterous robot hands generally available. | NET 2030 BY 2040 (I hope!) | Despite some impressive lab demonstrations we have not actually seen any improvement in widely deployed robotic hands or end effectors in the last 40 years. | |
| A robot that can navigate around just about any US home, with its steps, its clutter, its narrow pathways between furniture, etc. | Lab demo: NET 2026 Expensive product: NET 2030 Affordable product: NET 2035 | What is easy for humans is still very, very hard for robots. | |
| A robot that can provide physical assistance to the elderly over multiple tasks (e.g., getting into and out of bed, washing, using the toilet, etc.) rather than just a point solution. | NET 2028 | There may be point solution robots before that. But soon the houses of the elderly will be cluttered with too many robots. | |
| A robot that can carry out the last 10 yards of delivery, getting from a vehicle into a house and putting the package inside the front door. | Lab demo: NET 2025 Deployed systems: NET 2028 | | |
| A conversational agent that both carries long term context, and does not easily fall into recognizable and repeated patterns. | Lab demo: NET 2023 Deployed systems: 2025 | Deployment platforms already exist (e.g., Google Home and Amazon Echo) so it will be a fast track from lab demo to widespread deployment. | |
| An AI system with an ongoing existence (no day is the repeat of another day as it currently is for all AI systems) at the level of a mouse. | NET 2030 | I will need a whole new blog post to explain this... | |
| A robot that seems as intelligent, as attentive, and as faithful, as a dog. | NET 2048 | This is so much harder than most people imagine it to be; many think we are already there; I say we are not at all there. | |
| A robot that has any real idea about its own existence, or the existence of humans in the way that a six year old understands humans. | NIML | | |

There have been many criticisms in the popular press over the last year about how AI is not living up to its hype. Perhaps the most influential is from The Economist. The headline was “An understanding of AI’s limitations is starting to sink in”, with the lede “After years of hype many people feel that AI has failed to deliver”. Such rationality has not stopped other breathless stories in outlets that should know better, such as the AAAS journal Science, and sometimes even Nature. The ongoing amount of hype is depressing. And it is mostly inaccurate.

Attention

In the table above I mentioned that both common sense and attention have surfaced as something that current ML methods are lacking and at which they do not do well.

Although learning everything from scratch has become a fetish in ML (see Rich Sutton and my response, both from 2019), there are advantages to using extra built-in machinery. And of course, whenever any ML-er shows off their system learning something new, they themselves have been the external built-in machinery. They, the human, chose the task, the reward, and the data set or simulator, with what was included and what was not. Then they say “see, it did it all by itself!”. Ahem.

We have seen reinforcement learning (RL) being used to learn manipulation tasks, but those tasks are contrived by the humans writing the papers, and learning them can be extraordinarily expensive in terms of cloud computation time. How do children learn to manipulate? They do it in evolutionarily pre-guided ways. They learn simple grasps, then they learn the pinch between forefinger and thumb, and along the way they learn to direct force to those grasps from their arm muscles. And in some seemingly magical way, they are able to use these earlier skills as building blocks.

By the time they are 18 months old (and I just observed this in my own grandson), presented with a door locked with an unknown mechanism (four different instances in this particular anecdote), children use affordances to know where to grasp and how to grasp. They do not apply arbitrary forces to the wood of the door, except to try to pull it open to test whether they have gotten the lock into the unlocked state; instead they apply seemingly random forces and motions (just like a machine RL learner!) to just the lock mechanism, experimenting with different grasps and forces. They direct their manipulation attention to the visible lock mechanism, rather than treating the whole thing as one big point cloud as a machine RL learner might. They know where to pay attention.

Of course this strategy might fail, and that is precisely the allure of secret lock wooden boxes. But usually it works. And the search space for the learner is a couple of orders of magnitude smaller than the “learn everything” approach. When the bill for cloud computer time is tens of millions of dollars, as it is for some “advances” promoted by large companies, an order of magnitude or two can make a real cash difference.

We need to figure out the right mechanisms for attention and common sense and build those into our learning systems if we are to build general purpose systems.

GPT-3 (or the more things change the more they are the same…)

Will Bridewell has likened GPT-3 to a ouija board, and I think that is very appropriate. People see in it what they wish, but there is really nothing there.

<rant>

GPT-3 (Generative Pre-Trained Transformer 3) is a BFNN (that’s a technical term) that has been fed about 100 billion words of text from many sources. It clusters them, and then when you give it a few words it rambles on, completing what you have said. Reporters have seen brilliance in these rambles (one should look at the reports about Eliza from the 1960’s), and some have gone as far as taking various output sentences, putting them together in an order chosen by the journalist, and claiming that the resultant text is what GPT-3 said. Disappointingly, The Guardian did precisely this, getting GPT-3 to generate 8 blocks of text of about 500 words each; the journalists then edited them together into about 1,100 words and said that GPT-3 had written the essay. Which was true in only a very sort-of way. And some of these journalists (led on by the PR people at OpenAI, the misnamed company that produced GPT-3) go all tingly about how it is so intelligent that it is momentous and dangerous all at once; oh, please, the New York Times, not you too!! This is BS (another technical term), plain and simple. Yes, I know, the Earth is flat, vaccines will kill you, and the world is run by pedophiles. And if I weren’t such a bitter pessimist I would hop on the GPT-3 train of wonder, along with all those glorious beliefs promoted by a PR department.

Not surprisingly, given the wide variety of sources of its 100 billion training words, GPT-3’s language is sometimes sexist, racist, and otherwise biased. But people who believe we are on the verge of fully general AI see the words it generates, which are rehashes of what it has seen, and believe that it is intelligence. A ouija board. Some of its text fragments have great poetry to them, but they are often unrelated to reality, and anyone who is even a little skeptical can input a series of words for which its output clearly shows it has none of the common human level understanding of those words (see Gary Marcus and Ernie Davis in Technology Review for some examples). It just has words and their relationships, with no model of the world. The willfully gullible (yes, my language is harsh here, but the true believers or journalists who rave about GPT-3 are acting like cult followers–it is a mirage, people!) see true intelligence, but the random rants it produces (worse than my random rants!) will not let it be part of any serious product.

And finally, GPT-n was actually invented, using neural networks, back in 1956. It just needed more and bigger computers to fool more of the people more of the time. In that year, Claude Shannon and John McCarthy edited a collection of papers (by thirteen mostly very famous authors, including themselves, John von Neumann, and Marvin Minsky) titled Automata Studies, published by Princeton University Press (Annals of Mathematics Studies, Number 34).

I love that the first paragraph of their preface begins and ends:

Among the most challenging scientific questions of our time are the corresponding analytic and synthetic problems. How does the brain function? Can we design a machine which will simulate a brain? … Currently it is fashionable to compare the brain with large scale electronic computing machines.

Apart from the particular sparse style of their language, an equivalent passage could easily be thought to have been written by any number of modern researchers, sixty-four years later.

On page vi of their preface they criticize a GPT-n like model of intelligence, one that is based on neural networks (the words used in their volume) and able to satisfy the Turing Test (not yet quite called that) without really being a thinking machine:

A disadvantage of the Turing definition of thinking is that it is possible, in principle, to design a machine with a complete set of arbitrarily chosen responses to all possible input stimuli (see, in this volume, the Culbertson and the Kleene papers). Such a machine, in a sense, for any given input situation (including past history) merely looks up in a “dictionary” the appropriate response. With a suitable dictionary such a machine would surely satisfy Turing’s definition but does not reflect our usual intuitive concept of thinking.

The machine they refer to here, a neural network, is just like the trained GPT-3 network, though Culbertson and Kleene, in 1956, had not figured out how to train it.
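The “dictionary” machine in the preface is easy to sketch, and doing so shows why it is uneconomical. Below is a minimal Python illustration, assuming a table keyed on the full history of inputs. It models only the behavior described in the quotation (a lookup on the whole input situation), not the internals of any neural network; the table contents, the fallback reply, and the figure of 10,000 possible inputs per turn are all hypothetical.

```python
from typing import Dict, List, Tuple

History = Tuple[str, ...]


class DictionaryMachine:
    """Answers by looking up the entire input history in a fixed table.

    There is no model of the world here: every reply was chosen in advance
    by whoever filled in the table.
    """

    def __init__(self, table: Dict[History, str], fallback: str = "I see.") -> None:
        self.table = table
        self.fallback = fallback
        self.history: List[str] = []

    def respond(self, stimulus: str) -> str:
        self.history.append(stimulus)
        # The key is the full history of stimuli, following the preface's
        # "for any given input situation (including past history)".
        return self.table.get(tuple(self.history), self.fallback)


def table_entries(num_inputs: int, max_turns: int) -> int:
    """Keys needed to cover every history of 1..max_turns inputs drawn from num_inputs possibilities."""
    return sum(num_inputs ** k for k in range(1, max_turns + 1))


table = {
    ("Hello.",): "Hello! Nice to meet you.",
    ("Hello.", "Can you think?"): "What a question. Can you?",
}
machine = DictionaryMachine(table)
print(machine.respond("Hello."))          # Hello! Nice to meet you.
print(machine.respond("Can you think?"))  # What a question. Can you?
print(table_entries(10_000, 3))           # 1000100010000
```

With only 10,000 possible inputs per turn, covering three-turn conversations already needs over a trillion entries, which gives some sense of why such a machine could “surely satisfy Turing’s definition” in principle while being hopeless in practice.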

Stephen Kleene was a very famous logician and an academic Ph.D. sibling of Alan Turing, under Alonzo Church. James Culbertson is a forgotten engineer who speculated on how to build intelligent machines using McCulloch-Pitts neurons, here and elsewhere–I rehabilitate him a little in my upcoming book.

S. C. Kleene, Representation of Events in Nerve Nets and Finite Automata, pp. 3-41.

James T. Culbertson, Some Uneconomical Robots, pp. 99-116.

</rant>

AlphaFold

Finally, I want to mention AlphaFold, which right at the end of the year got incredible press, breathless incredible press I would say, about “solving” an outstanding question in science.

Given a sequence of amino acids making up a protein, how will that protein fold due to physical forces between parts of nearby acids? This is a really important question. The three-dimensional structure, what is inside, what is on the outside, and what adjacencies there are on the surface of the folded molecule, determine how that protein will interact with other molecules. Thus it gives a clue as to how drugs will interact with a protein. People in Artificial Intelligence have worked on this since at least the early 1990’s (e.g., see the work of Tomás Lozano-Pérez and Tom Dietterich).

AlphaFold (from DeepMind, the subsidiary of Google that is losing the better part of a billion dollars per year) is a real push forward. But it does not solve the problem of predicting protein folds. It is very good in some cases, and very poor in other cases, and you don’t know which it is unless you know the real answer already. You can see a careful (and evolving) analysis here. It is long, so you can skip to the four paragraphs of conclusion to hear about both the goods and the bads. It likely will not revolutionize drug discovery without a lot more work on it, and perhaps never.

While AlphaFold is another interesting “success” for machine learning, it does not advance the fields of either AI or ML at all. And its long term impact is not yet clear.

Space

In 2020 SpaceX came back from the explosion of a Crew Dragon capsule that happened during a ground test on April 20th, 2019. They were able to launch their first two people to space aboard Demo-2 on May 30th, 2020. Bob Behnken and Doug Hurley, both NASA astronauts, docked with the ISS on May 31st, and stayed there until their return and splashdown on August 2nd. On November 16th, Crew-1 launched with three NASA astronauts and one JAXA astronaut on board, and it is currently docked to the ISS where the astronauts are working.

Boeing’s entry into manned space flight, the CST-100, is behind SpaceX. A software problem in December 2019 meant that an unmanned flight did not make it to the ISS, though it did return safely to Earth. In April of 2020 Boeing announced it would refly the unmanned test in the fourth quarter of 2020. That has now slipped to the first quarter of 2021 due to further software issues. I think Boeing is one glitch away from not getting a manned flight in 2021. So my optimism for them is going to be tested.

There was only one attempt at a manned suborbital flight in 2020 but it did not get to space. On December 12th the rocket motor of Virgin Galactic’s SpaceShipTwo Unity did not ignite after the craft was dropped as planned from its mothership at over 40,000 feet in altitude.

On October 13th, 2020, Blue Origin’s New Shepard 3 had its 7th successful unmanned test flight. A new craft, New Shepard 4, was slated to undergo an unmanned test flight in the last two months of the year, as the 14th test flight of a New Shepard system. That did not happen. New Shepard 4 is currently expected to fly the first manned mission for Blue Origin.

| Prediction [Space] | Date | 2018 Comments | Updates |
|---|---|---|---|
| Next launch of people (test pilots/engineers) on a sub-orbital flight by a private company. | BY 2018 | | 20190101 Virgin Galactic did this on December 13, 2018. 20200101 On February 22, 2019, Virgin Galactic had the second flight to space of their current vehicle, this time with three humans on board. As far as I can tell that is the only sub-orbital flight of humans in 2019. Blue Origin's New Shepard flew three times in 2019, but with no people aboard, as in all its flights so far. 20210101 There were no manned suborbital flights in 2020. |
| A few handfuls of customers, paying for those flights. | NET 2020 | | 20210101 Things will have to speed up if this is going to happen even in 2021. I may have been too optimistic. |
| A regular sub weekly cadence of such flights. | NET 2022 BY 2026 | | |
| Regular paying customer orbital flights. | NET 2027 | Russia offered paid flights to the ISS, but there were only 8 such flights (7 different tourists). They are now suspended indefinitely. | |
| Next launch of people into orbit on a US booster. | NET 2019 BY 2021 BY 2022 (2 different companies) | Current schedule says 2018. | 20190101 It didn't happen in 2018. Now both SpaceX and Boeing say they will do it in 2019. 20200101 Both Boeing and SpaceX had major failures with their systems during 2019, though no humans were aboard in either case. So this goal was not achieved in 2019. Both companies are optimistic of getting it done in 2020, as they were for 2019. I'm sure it will happen eventually for both companies. 20200530 SpaceX did it in 2020, so the first company got there within my window, but two years later than they predicted. There is a real risk that Boeing will not make it in 2021, but I think there is still a strong chance that they will by 2022. |
| Two paying customers go on a loop around the Moon, launch on Falcon Heavy. | NET 2020 | The most recent prediction has been 4th quarter 2018. That is not going to happen. | 20190101 I'm calling this one now as SpaceX has revised their plans from a Falcon Heavy to their still developing BFR (or whatever it gets called), and predict 2023. I.e., it has slipped 5 years in the last year. |
| Land cargo on Mars for humans to use at a later date | NET 2026 | SpaceX has said by 2022. I think 2026 is optimistic but it might be pushed to happen as a statement that it can be done, rather than for a pressing practical reason. | |
| Humans on Mars make use of cargo previously landed there. | NET 2032 | Sorry, it is just going to take longer than everyone expects. | |
| First "permanent" human colony on Mars. | NET 2036 | It will be magical for the human race if this happens by then. It will truly inspire us all. | |
| Point to point transport on Earth in an hour or so (using a BF rocket). | NIML | This will not happen without some major new breakthrough of which we currently have no inkling. | |
| Regular service of Hyperloop between two cities. | NIML | I can't help but be reminded of when Chuck Yeager described the Mercury program as "Spam in a can". | |

As noted in the table, SpaceX originally predicted that they would send two paying customers around the Moon in 2018, using Falcon Heavy as their launch vehicle. During 2018 they revised that to a new vehicle, now known as Starship, with a date of 2023. Progress is being made on Starship, and in 2020 the 55m long second stage flew within the atmosphere for more than a simple hop. It did controlled aerodynamic flight as it came in to land, and impressively and successfully flipped to vertical for a landing. There were some fuel control issues and it did not decelerate enough for a soft landing, and blew up. This is real and impressive progress.

So far we haven’t seen the 64m first stage, though there are rumors of a first flight in 2021. This will be a massive rocket, and given the move fast and blow up strategy that SpaceX has very successfully used for the second stage, we should expect its development to have some fiery moments.

A 55m long vehicle to loop around the Moon seems like a bit of overkill, but that could be the point. However, I will not be surprised at all if the mission slips beyond 2023. If fantastic progress happens in 2021 I will get more confident about 2023, but 2021 will have to be really spectacular.

While I agree with almost all of the questions you are posing to the self-driving companies, I am not sure about the requirement that the passenger is allowed to communicate with the car by voice. We’ll probably have 100% reliable voice assistants before we have self-driving cars, but we don’t use our voices to control our existing level-2 automated cars, and I can’t see why that’s a requirement for self driving.

I am not saying that it is a requirement for self driving. But I think it is going to be very useful for a self driving taxi service. We all communicate by voice with our taxi and ride share drivers in order to update directions, jump out early, vector into a precise drop off when we have heavy luggage, etc. We may not like being a captive of a self driving taxi if we can not change our minds.

Thanks for taking the time to make these predictions and to be so concrete about them. It’s incredibly valuable and I wish more people would do what you’re doing.

What do you think about some of the recent claims of self-driving taxis in China? See e.g. https://www.cnn.com/2020/12/03/tech/autox-robotaxi-china-intl-hnk/index.html

“In Shenzhen, AutoX has completely removed the backup driver or any remote operators for its local fleet of 25 cars, it said. The government isn’t restricting where in the city AutoX operates, though the company said they are focusing on the downtown area.”

I already have a paragraph in this post about it. Search for “AutoX”.

Hi Dr. Brooks, thanks for these ongoing predictions and notes!

Even with millions of dollars and blind search (on how to open a door by blindly trying everything, not just lock fiddling), there are still many more issues, right?

E.g., the RL learner has no understanding of how/why it worked – can’t articulate it meaningfully (e.g., in terms of gesture sequences), can’t summarize the steps, can’t generalize, can’t transfer (adapt) to a somewhat different lock, can’t invent a better mechanism, use prior knowledge/experience/memory to do a bit of ‘figuring out’ (reasoning), can’t make it (design) harder/easier… These are all things a human could do, on account of having had direct, interactive, physical experiences with all manners of things, including ones unrelated to locks.

AND, a human could have observed someone else opening the door – even a vague memory of the motions involved might be useful…

“And finally, GPT-n was actually invented, using neural networks, back in 1956. ”


Absurd.

“The machine they refer to here, a neural network, is just like the trained GPT-3 network, though Culbertson and Kleene, in 1956, had not figured out how to train it.”

It turns out training neural networks is the hard part, especially deep NNs. You treat it as if it’s some minor detail that could be farmed out to a grad student. It’s true that GPT is overhyped in the media, which you gleefully spend all of your time dunking on while ignoring expert commentary and insights.

Could you at least address the scaling properties of transformers? The fact that GPT-3 can perform arithmetic, solve Winograd schemas, etc., all while not being trained explicitly on those problems? Surely this progress is worth mentioning.

Absurd? Clearly I don’t think so or I would not have said it. Note that I have promised to update these posts yearly for 29 more years. So if it turns out to have been absurd (or if GPT-3 or transformers turn out to be worth anything) I will swallow my pride and say so–as I did in yesterday’s post about some intemperate remarks I aimed at VCs three years ago.

On effort. I don’t give gold stars here for effort. I give gold stars for advances that make the milestones in column 2 of the tables come true. [Lots of people spend a lot of effort climbing Mt Everest every year, but that does not advance the goal of getting cargo to Mars.] I don’t believe that either GPT-3 or transformers will have significant or sustained long term impact. They will be grouped with many, many other things that were going to completely change both AI and our world, including adalines, Conniver, AM, the primal sketch, Bacon.5, Cyc, Deep Blue, Watson, and AlphaZero. In my strongly held opinion they will never join the pantheon of reinforcement learning, A*, alpha-beta cutoff, back propagation, slots and fillers, 3-SAT, SLAM, or deep learning.

And yes, I do dunk on overhype in the media. That is why I posted my predictions three years ago. It was because the hype in the media was making people think that things were just around the corner, that it turns out were not. Most clearly this has happened in autonomous vehicles where billions of dollars were spent in reaction to those hyped up predictions. In yesterday’s post I referred to two of my blog posts in 2017 and 2018 which addressed those issues directly. Tamping down the hype is the point of my 2018 predictions and the point of my updates. If that is being too “gleeful” for you, I suggest not reading my posts–it is why I write them.

For more information I suggest looking through my posts. There is a long essay on Machine Learning Explained that gives the history of the invention of reinforcement learning by Donald Michie in 1959, and another on the Seven Deadly Sins of AI Predictions which explains why so many people get it wrong, mistaking specific performances for general capabilities — I think that is what all people who are excited about GPT-3 are doing.

[[BTW I did not mention graduate students, and I certainly would never talk about them in a pejorative way, or assign what I thought were minor tasks to them. Graduate students have always been my most highly respected colleagues. Our greatest advances often come from graduate students, in either their masters or PhD work. E.g., Alan Turing (computability), Claude Shannon (Boolean algebra applied to circuits), Jim Slagle (symbolic mathematics by computer), John Canny (edge detectors), Christopher Watkins (Q Learning), Larry Page (page rank), etc., etc.]]

Jay, GPT-3’s computations don’t amount to intelligence.

My calculator can perform arithmetic, so maybe I should want to have coffee with it 🙂

Fooling is fooling, regardless of how amazing the fooling seems. GPT-3 cannot possibly *understand* a thing about the world it waxes eloquently about, very simply because it does not live in it, so it does not experience it like you and I do.

Words are not merely words, they are used to convey meaning. Humans pick up language from scratch, at the same time they experience the world; GPT-3 did not.

How many of these can GPT-3 do [search ‘Bloom’s Taxonomy verbs’]?

https://images.slideplayer.com/16/5019841/slides/slide_3.jpg

All these are measurable, and, they are what we use to measure human mastery.

Re: “Absurd”.

In their brand new Stochastic Parrots (what a cutting turn of phrase!) paper, Bender, Gebru, et al. also look into the origins of the ideas behind GPT-3 and its brethren.

http://faculty.washington.edu/ebender/papers/Stochastic_Parrots.pdf

They attribute the idea of Language Models (LMs) to Claude Shannon (their reference 117) in his 1949 book on the theory of communication. They give (section 2) a long and detailed history of LMs, and conclude: “Nonetheless, all of these systems share the property of being LMs in the sense we give above, that is, systems trained to predict sequences of words (or characters or sentences). Where they differ is in the size of the training datasets they leverage and the spheres of influence they can possibly affect.”
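Their definition, “systems trained to predict sequences of words”, is easy to illustrate with a word bigram model of the kind Shannon described. Here is a minimal Python sketch on a tiny made-up corpus. GPT-3 uses a transformer network trained on vastly more text, so this shows only the property the paper says all LMs share, not GPT-3’s mechanism; the corpus and the greedy choice of the most frequent next word are assumptions for illustration.

```python
from collections import Counter, defaultdict
from typing import Dict


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    model: Dict[str, Counter] = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        model[prev][nxt] += 1
    return model


def next_word_probs(model: Dict[str, Counter], word: str) -> Dict[str, float]:
    """Estimate P(next word | word) from relative frequencies."""
    counts = model.get(word.lower())
    if not counts:
        return {}
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()}


def complete(model: Dict[str, Counter], prompt: str, n_words: int) -> str:
    """Extend the prompt greedily, always taking the most frequent follower."""
    words = prompt.lower().split()
    if not words:
        return ""
    for _ in range(n_words):
        counts = model.get(words[-1])
        if not counts:
            break  # the last word was never followed by anything in training
        words.append(counts.most_common(1)[0][0])
    return " ".join(words)


corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(next_word_probs(model, "the"))  # {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
print(complete(model, "the", 3))      # the cat sat on
```

The model “completes what you have said” purely from counts of which words followed which; scale the counts and the context up enormously and you get fluent text, but nothing in the procedure refers to the world the words are about.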

In my post I attribute the first description of two proposed implementations using neural networks to Claude Shannon and John McCarthy, in 1956.

<troll>

Are you saying that I was absurd because I described the invention as sixty-four years old (in 2020) when others describe it as seventy-one years old?

</troll>

Combining your two points of “First dedicated lane where only cars in truly driverless mode …” and “Individually owned cars can go underground onto a pallet and be whisked underground …”, I think a road system might be set up such that cars are only allowed into it if they are capable of fully ceding control to the external system while within its bounds.

E.g., take a new tunnel with entry lane controls that only allow cars with the correct transponders fitted to enter; as cars enter they cede all control to the tunnel, which drives them at high speed via the same transponder; the driver regains control as they are spat out at their desired exit.

This is relatively easy to do because the car is not autonomous in any sense; there are cars on the road now that could have this sort of system retrofitted, even by an over-the-air update. There is also the financial carrot of higher cars/hr for a given infrastructure spend.

Not disputing your predictions, just there might be a third way that achieves similar outcomes for minimal effort. So I’m calling it as BY 2025 if there is someone with the will to do it (and push through the regulations).

Great list, nowhere to hide if you get it wrong 🙂

DB

I agree that this is plausible technically. I just don’t see that it will, in the time frame, have enough benefit for there to be sufficient market pull for it. Building a tunnel is a lot more expensive than using an existing lane on an existing freeway, so it would be much more difficult to fit in a business model. So I am going to say probably not in this time frame.

What I proposed is almost ‘hardware complete’ with Boring Co’s Las Vegas tunnel https://www.boringcompany.com/lvcc. It is really a subway system using cars instead of trains. Currently it’s just three stations but they recently announced it will expand to 29 miles of tunnel and 51 stations.

At the moment the tunnel cars are driven by human drivers. But the cars have all the actuation needed to make them just a software update away from turning the drivers into safety observers, then later removing them entirely. Given the restricted environment, the software update should be considerably less complex than that required for open road autonomy … that’s if the car is in control – if the tunnel is in control it’s already been done.

The missing piece from my idea is that there is no on-ramping or off-ramping of external cars into/out of the tunnels. One way to do this would be with carparks. So as you go in and take your ticket, that is the point where you cede control of your car to the system and gain it back where you pay on exit. The car would then drop you to the closest pedestrian access to your destination and park your car … somewhere. When you’ve done your business you call your car to pick you up and continue on your merry way. It would work particularly well if hotels, airports, businesses, etc could connect their already built carparks to the system with stub lines.

As you say, whether it happens is not just a technical question; there’s also finances, regulations, motivation, etc. But while they are more expensive, I think tunnels might do this before a dedicated freeway lane, just because the NIMBYism factor will be much less. And there is a certain transport obsessed industrialist who actually has all the puzzle pieces already built and under his direct control right now.

All the best. DB

I think that Agility Robotics Digit meets the “lab demo” criterion for delivering a box from car to front door, which it did in 2019. Some of this demo is canned, but there has been real progress there.

https://www.youtube.com/watch?v=u7e4WbIyakU

No, not even remotely. That video is a concept video, made by Ford’s PR department, not a video of anything actually working at all. It’s a movie. It’s like saying that a Star Trek episode is a lab demo of a warp drive.

Concept videos are important. Here is Apple’s concept video for Siri: https://www.youtube.com/watch?v=p1goCh3Qd7M

I saw this video at conferences year after year and it really was a vision that guided research work in speech interaction, in knowledge representation, in automated calendars, etc., for AI researchers everywhere.

The video was made in 1987. Siri was released in 2010, twenty-three years later. Still today, eleven years after Siri’s first release, Siri can not do many of the things in the now thirty-four-year-old concept video.

But it was a revolutionary and world changing video. Concept videos can be fantastic drivers. But they are not lab demos.

Concept videos imagine how things will appear to outside observers. But they do not even identify or enumerate the hard problems that need to be solved in order for a real product to be built. Lab demos not only enumerate the hard problems, they demonstrate autonomous systems solving those hard problems for real.

Here is a quick enumeration of the hard problems that would have to be solved to make this movie be a lab demo. None of these steps have ever been done even in a lab.

1. Deployable autonomous driving, including choosing a parking spot at the right place to give access to the house — on top of autonomous driving this requires the vehicle to build a model of the house as it pulls up and from that decide the right parking spot — this includes solving problems 3 and 4 below.

2. The robot has to look out the back opening of the truck and autonomously figure out where and how to get out of the truck in a way that it will be stable on the ground.

3. Some system, in the truck or robot, has to deduce where an appropriate spot would be to leave the package — this involves an understanding of house design and likely human behavior — e.g., hiding it under a bush will not make it visible to the human who will notice the package at other places, e.g., on the stoop.

4. Plan a path from the back of the truck to the drop off point, based on a three dimensional map of the local world. This is closest to a lab demo, and could be doable in 2021.

5. Operate autonomously to follow that path, and to dynamically deal with unseen obstacles, and figure out how to respond to dogs, humans, etc., along the way (not shown in this particular idealized movie — real life is never a movie).

6. Have a recovery strategy to be able to get up from a fall, including being able to pick up and reorient that package again. Those of us who have been up close to an Agility Robot being tele-operated have all seen them fall, and fall hard and dangerously, and know that a manual crane is currently needed just to get the robot up again, let alone deal with the dropped package. This is not a criticism of Agility; it is simply an acknowledgement of the current reality.

Bottom line. Movies are great. Movies are not about technology readiness.

This is brilliant stuff and accords with the contents of my book ‘Driverless cars: on a road to nowhere?’ obtainable via my website and published by London Partnership Books. My only question, Rodney, is one I have asked constantly over the five years I have written about this subject: how do they get away with the hype? What idiots on the stock market believe Musk when he says you can leave your car overnight and it will be whisked off to the nearest city to act as a driverless taxi and return to your driveway the next morning. It is beyond fantasy.

Good questions. That is why I write these blog posts, to try to shake people into reality. You can see from the comments I do approve that many people are completely confused about reality even after reading my posts, so apparently I am not doing a good job. The need to believe in magic is apparently very strong.

BTW, I don’t usually approve the comments that are abusive to me about my total ignorance of how strong AI technology really is, and how I am a total failure in my life. Nor about the “incredibly powerful AI systems that are being built at secret government labs”…

I’m not sure you’re right that transformers will be as easily forgotten as, say, CYC or that poor Automated Mathematician. AlphaFold seems to be based on the transformer architecture and while you say that “it does not solve the problem of predicting protein folds”, many computational biologists seem to think it very much does solve the problem. The article you link discusses some limitations of the approach but for the most part its author seems to be convinced that the problem of protein structure prediction (but not “protein folding”) is solved. For example the article notes: “More importantly, _while AlphaFold 2 provides a general solution for protein structure prediction_, this does not mean that it is universal” (my underlining).

That’s not to say I’m convinced that transformers are the big advance that they’re made to be. Deep learning papers always claim that it’s their proposed architectural tweaks that are responsible for the new state of the art on some benchmark, but this is seldom supported by empirical evidence, e.g. ablation experiments etc. In the meantime, the datasets keep getting bigger and bigger and the architectures deeper and deeper, so there’s a simpler explanation for the improved performance: more data and bigger computers (indeed, wasn’t that the “bitter lesson”?). But that doesn’t matter. AlphaFold performed an impressive feat that won’t be easily forgotten and it uses a transformer architecture, so transformers won’t be easily forgotten.

I think you are right to say that AlphaFold “does not advance the fields of either AI or ML at all”. As far as I can tell, predicting protein structure from sequences is not a hallmark of intelligence. Like you say, AI approaches have been applied on the task (and other bioinformatics tasks) since the 1990’s, but I think the reason is that such tasks are often modelled as language modelling tasks and language modelling itself is a classic AI task; not because performing well at bioinformatics tasks has anything to do with intelligence.

In a way, that AlphaFold is good at protein structure prediction tells us that it most likely isn’t about to develop anything close to human-like intelligence. Humans are awful at protein structure prediction (else we wouldn’t need a computer to do it) so being good at it tells us nothing about the ability to think like a human. I suppose one might argue that the intelligence we can expect deep neural networks to develop is simply in-human and super-human, but that takes us all the way back to the question of why, then, aren’t pocket calculators considered intelligent, when they can perform inhumanly complex calculations at superhuman speeds.

John McCarthy had a phrase about what’s going on: the “look ma, no hands” disease of AI research. He wrote about it in his review of the Lighthill Report: “Someone programs a computer to do something no computer has done before and writes a paper pointing out that the computer did it. The paper is not directed to the identification and study of intellectual mechanisms and often contains no coherent account of how the program works at all”. That covers about 99% of the deep learning publications since 2012. And it’s a shame because early work was actually quite interesting and seemed to be going somewhere. Then it fell victim to its own success, I guess.

Thanks for the very thoughtful and erudite comment. I can always be wrong (as can anyone else trying to predict the future), so hold me to it if transformers turn out to be more long standing than I currently think. I have 29 years in which to repent!

In turn, I thank you for being a voice of reason and sensibility in the midst of all the hype. I’m sorry to hear of all the idiots who write to you with personal insults and barmy theories about secret government labs.

Don’t be too harsh on Musk. Yes, he is excessively optimistic. But it is a different kind of excessive optimism from those who over-hype to get funding. Those who accuse him of fraud don’t understand someone who is not personally motivated by money. The man does not need to over-hype any field to get funding. If anything he is a victim of over-hype. He needs information. It’s up to us to give him better information.

Sometimes things Musk says are taken too literally by people. E.g., naming Tesla software “Fully Self Driving”. Likewise, when cities were competing for Amazon to have a big new office there, one of them advertised that they would be connected to lots of cities in three years by Hyperloop. Now while it is the people being stupid in believing things that come out of Musk’s mouth, he is nevertheless responsible for what he says.